Self Attention In Deep Learning Definition



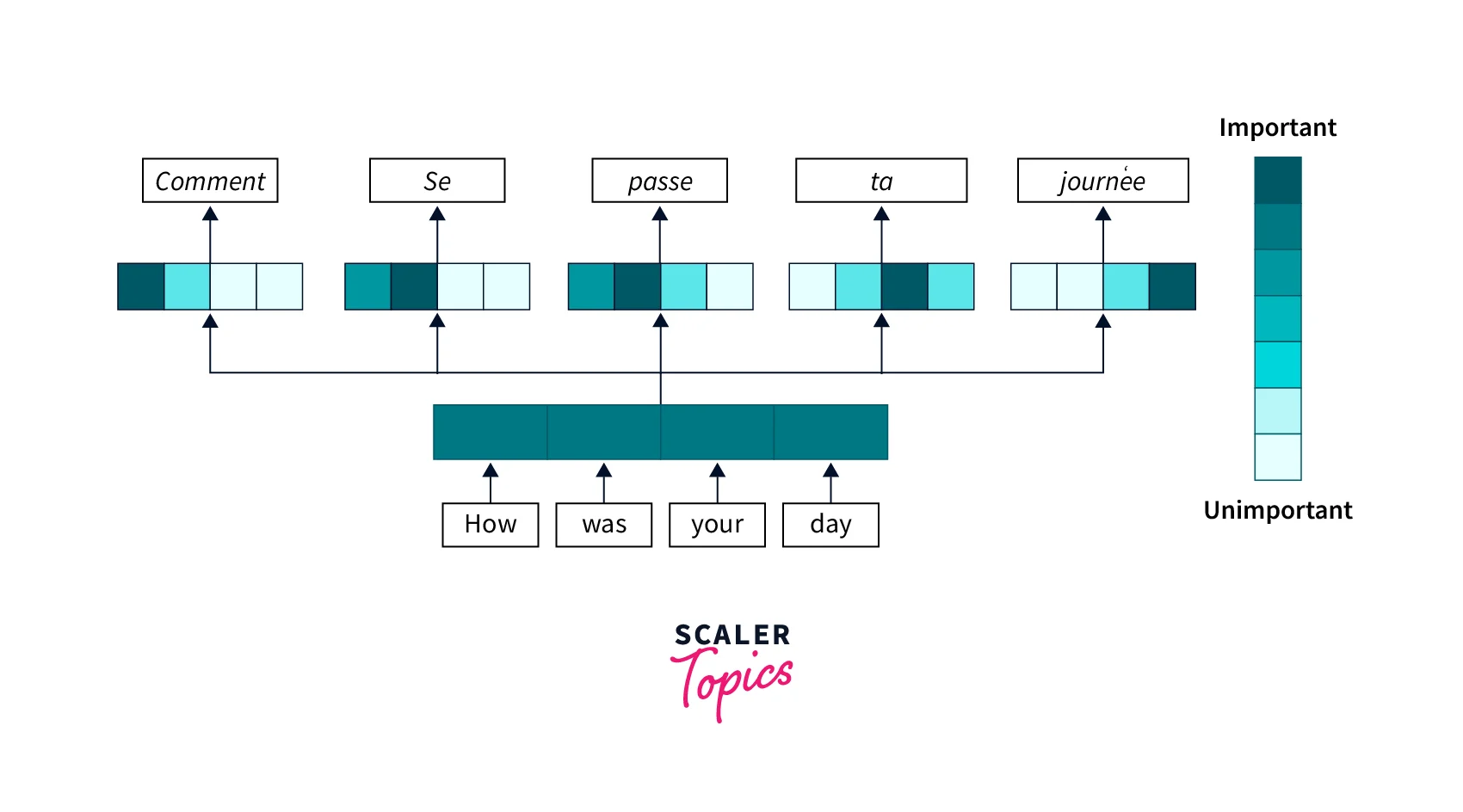

Jan 10, 2018: `self` refers to the class and is used to call static functions; `this` refers to an already instantiated object and is used to call functions of any other kind. Related: PyTorch Transformer (Scaler Topics); Self attention computer vision PyTorch code analytics (vrogue.co); Understanding attention mechanism in transformer neural networks.

A Review On The Attention Mechanism Of Deep Learning

Similarly, in self-attention our inductive bias is that inputs at different positions within a sequence (self-attention) or across sequences (cross-attention) are similar or correlated. On this basis …
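A minimal sketch of the distinction above, assuming plain scaled dot-product attention and leaving out the learned query/key/value projections and multiple heads that a real Transformer layer would use. The only difference between the two functions is where the keys and values come from. The helper names `attention`, `self_attention`, and `cross_attention` are hypothetical, not from any library.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Each query is scored against every key; its output is the
    softmax-weighted average of the value vectors.
    """
    d = len(keys[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

def self_attention(x):
    # Self-attention: queries, keys and values all come from the same sequence.
    # (Hypothetical simplification: no learned W_q, W_k, W_v projections.)
    return attention(x, x, x)

def cross_attention(x, context):
    # Cross-attention: queries come from x, keys and values from another sequence.
    return attention(x, context, context)
```

Because the weights for each query sum to 1, every output is a weighted average of the value vectors; if all values are identical, the output equals that value no matter what the queries are.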

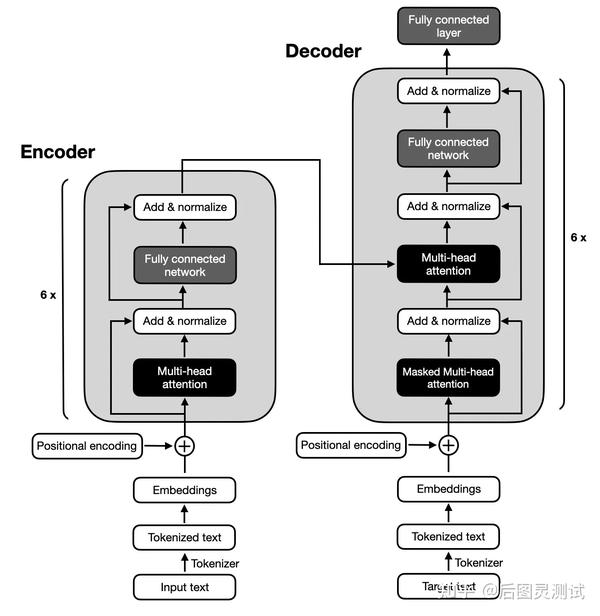

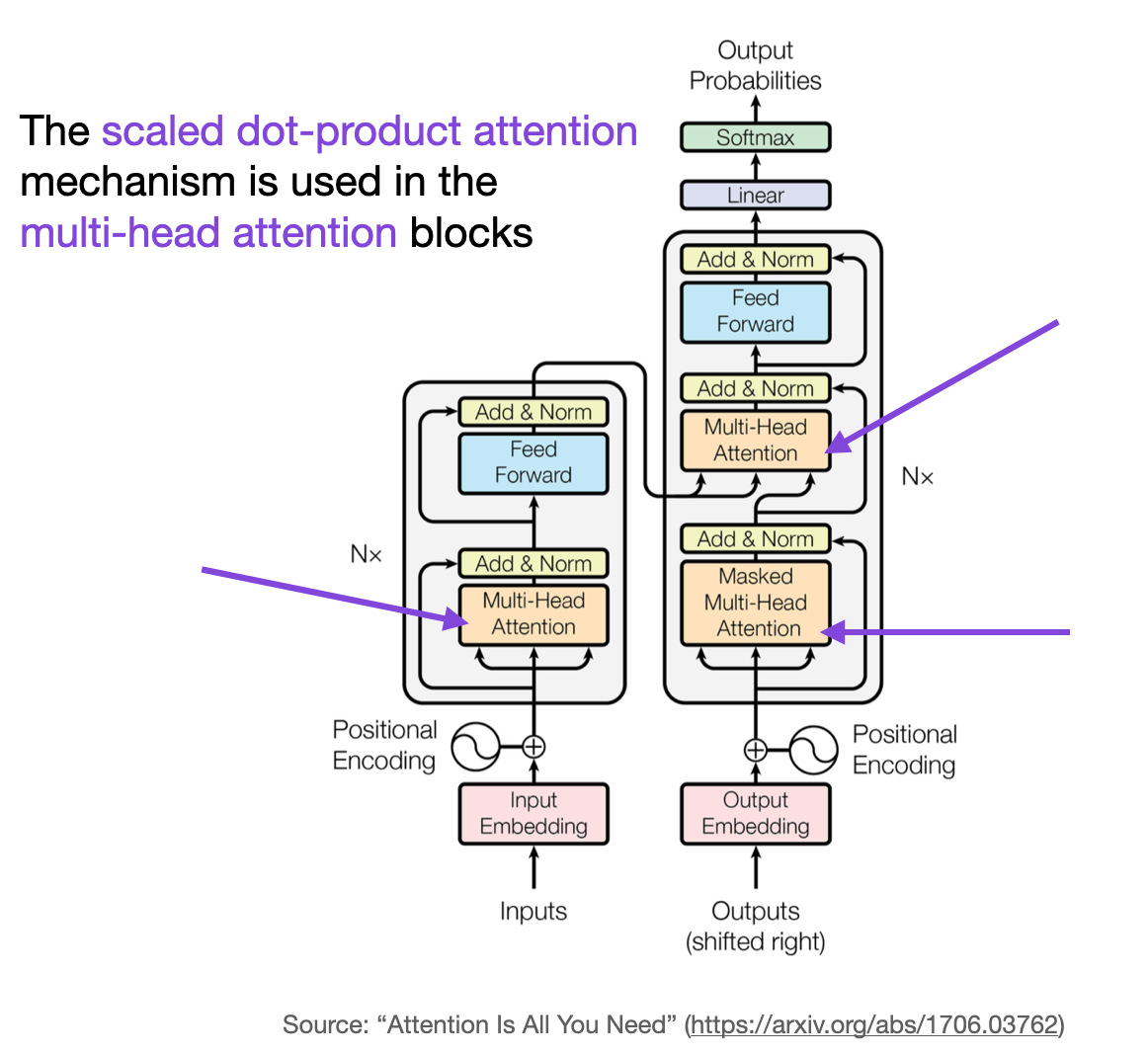

Three LLM architectures: encoder-only, decoder-only, and encoder-decoder.
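One way to see the difference between these families is the attention mask. The sketch below assumes additive masks applied to the raw scores before the softmax: encoder-only models let every position attend in both directions, decoder-only models use a causal mask so each token sees only itself and earlier tokens, and encoder-decoder models combine a bidirectional encoder with a causal decoder that also cross-attends to the encoder output. The function names are hypothetical.

```python
import math

NEG_INF = float("-inf")

def bidirectional_mask(n):
    # Encoder-only style: every position may attend to every other position.
    return [[0.0] * n for _ in range(n)]

def causal_mask(n):
    # Decoder-only style: position i may attend only to positions j <= i.
    return [[0.0 if j <= i else NEG_INF for j in range(n)] for i in range(n)]

def masked_attention_weights(scores, mask):
    # Add the mask to the raw scores, then softmax each row.
    # Entries masked with -inf end up with weight exactly 0.0.
    weights = []
    for row, mrow in zip(scores, mask):
        shifted = [s + m for s, m in zip(row, mrow)]
        top = max(shifted)  # finite, since the diagonal is never masked
        exps = [math.exp(s - top) for s in shifted]  # math.exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```

With a causal mask, the first row of weights is always `[1.0, 0.0, 0.0]` for three positions, whatever the scores, because position 0 can only attend to itself.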

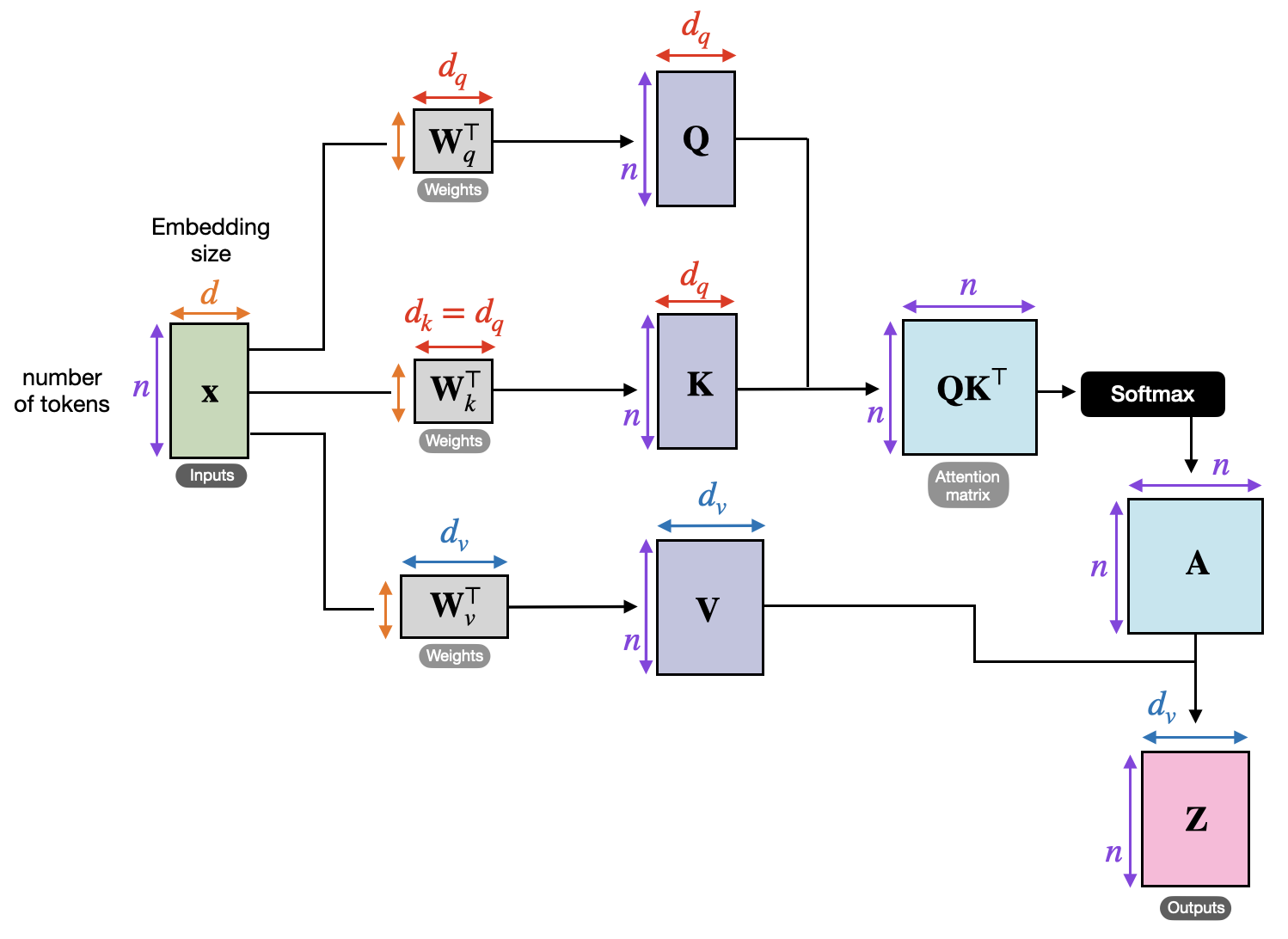

Jan 21, 2025: Applying RoPE to the Self-Attention computation in Eq. (4) above yields a Self-Attention that incorporates relative position information. It is worth noting that, because the rotation matrix is orthogonal, it does not change the norm of the vector, …

Jan 21, 2025: Self-Attention, then 3.2 Multi-Head Attention and how it relates to Self-Attention.
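A minimal pure-Python sketch of the two RoPE properties mentioned above, assuming the common formulation in which consecutive pairs of dimensions `(x[2i], x[2i+1])` are rotated by the angle `pos * base**(-2i/d)` with `base = 10000`; other implementations pair the dimensions differently. It does not reproduce the quoted article's Eq. (4), and the helpers `rope`, `dot`, and `norm` are hypothetical names. The checks show that the score between a rotated query and a rotated key depends only on their relative offset, and that the rotation leaves vector norms unchanged.

```python
import math

def rope(x, pos, base=10000.0):
    """Rotate consecutive pairs of x by angle pos * theta_i (rotary position embedding)."""
    d = len(x)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(d // 2):
        theta = base ** (-2 * i / d)  # per-pair frequency
        angle = pos * theta
        c, s = math.cos(angle), math.sin(angle)
        a, b = x[2 * i], x[2 * i + 1]
        # Apply the 2x2 rotation [[c, -s], [s, c]] to the pair (a, b).
        out.extend([a * c - b * s, a * s + b * c])
    return out

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

q = [0.3, -1.2, 0.5, 2.0]
k = [1.0, 0.4, -0.7, 0.1]
# Query at position 5 vs key at 2, and query at 13 vs key at 10: same offset of 3.
score_a = dot(rope(q, 5), rope(k, 2))
score_b = dot(rope(q, 13), rope(k, 10))
```

`score_a` and `score_b` agree up to floating-point error because both query/key pairs are 3 positions apart, and `norm(rope(q, 7))` matches `norm(q)` because each 2x2 rotation is orthogonal.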

Gallery for Self Attention In Deep Learning Definition

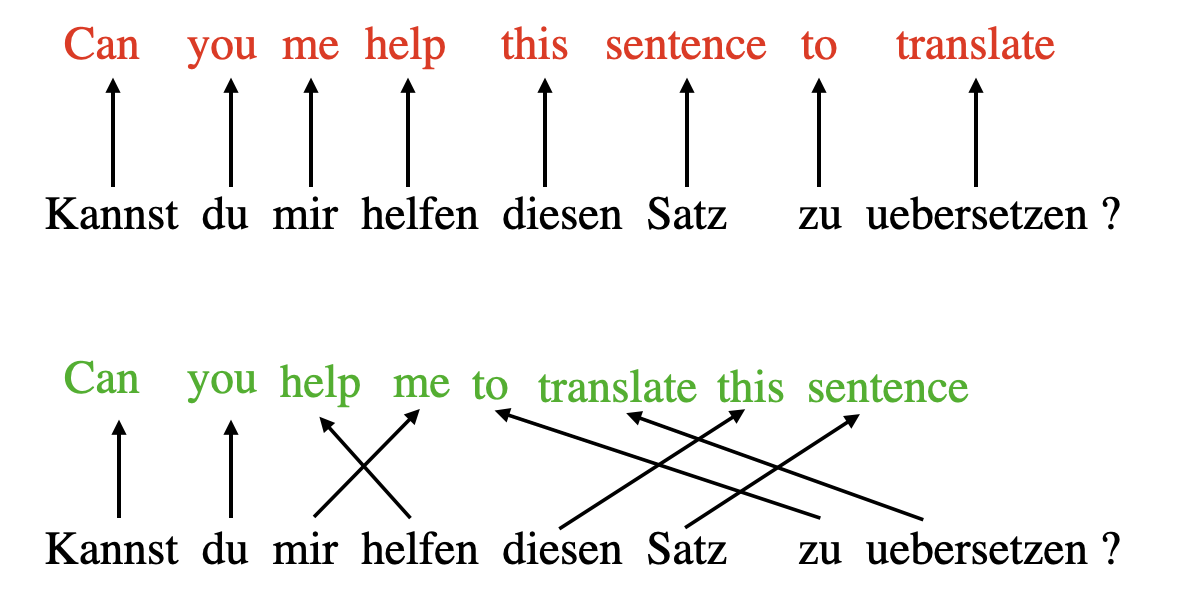

Understanding Attention Mechanism In Transformer Neural Networks

Self attention Mechanism Structure Of Rel SAGAN

Self Attention Made Easy: How To Implement It

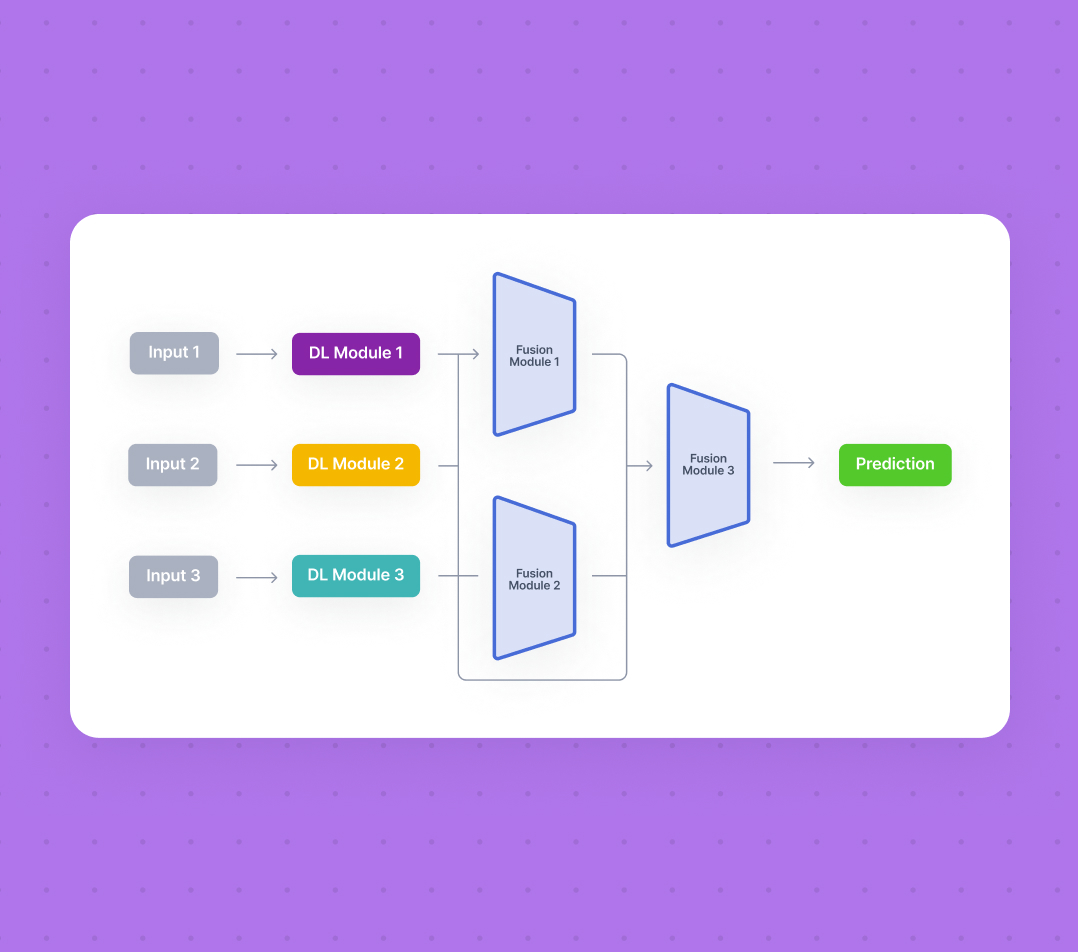

Unleash The Power Of Unreal Speech API Fusion Chat

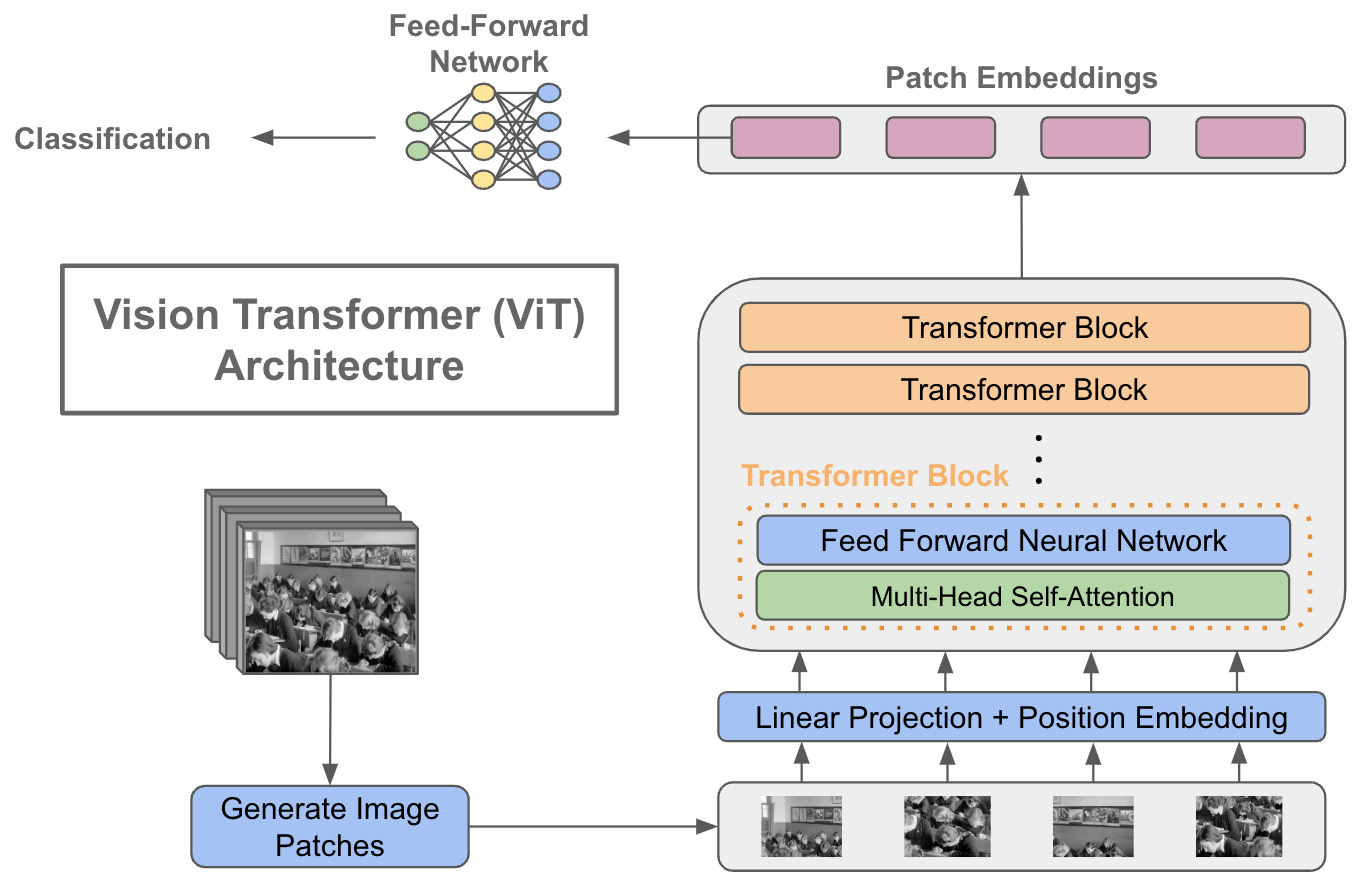

Vision Transformers By Cameron R. Wolfe, Ph.D.

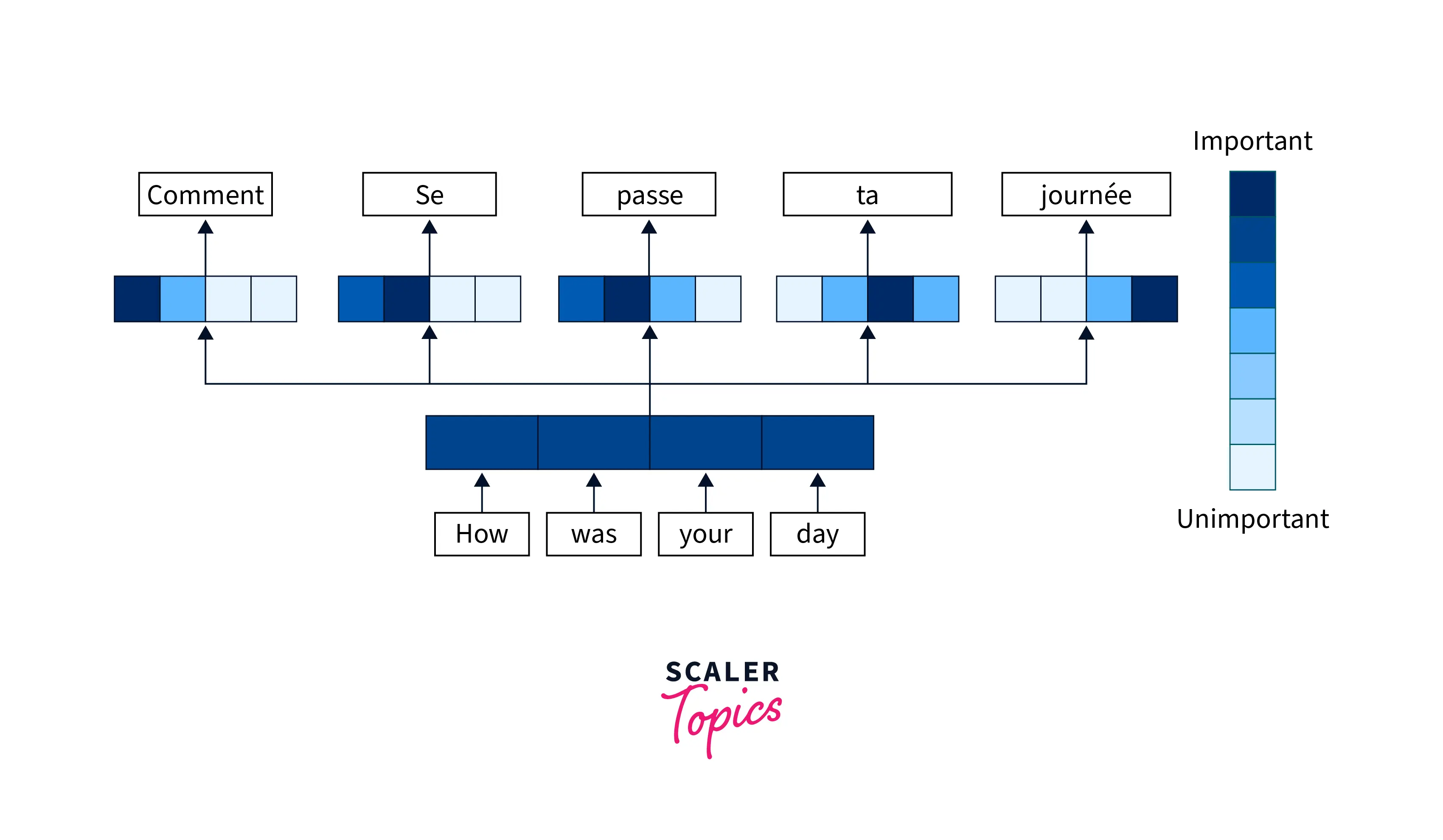

PyTorch Transformer Scaler Topics

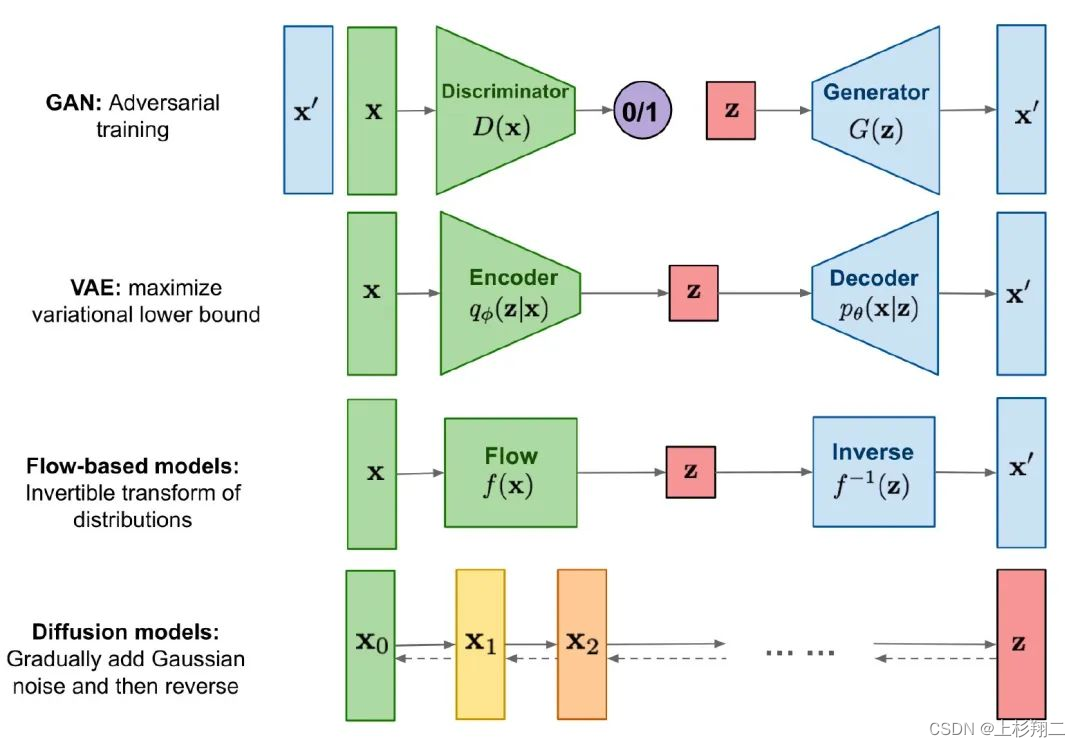

Diffusion Model DDPM GLIDE DALLE2 Stable Diffusion CSDN

The Attention Mechanism And Normalization Of Deep Lea Vrogue co

Understanding And Coding The Self Attention Mechanism Of Large Language
