Ollama.load Model

Apr 15, 2024 · I recently got ollama up and running. The only thing is I want to change where my models are located, as I have 2 SSDs and they're currently stored on the smaller one running …
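On the question of moving models to another drive: Ollama reads the `OLLAMA_MODELS` environment variable to decide where model files are stored. The following is a minimal sketch, assuming the `ollama` binary is on the PATH and the server is started from Python rather than as a system service. The helper names `ollama_env` and `start_server` and the example path are hypothetical.

```python
import os
import subprocess


def ollama_env(models_dir: str) -> dict[str, str]:
    """Return a copy of the current environment with OLLAMA_MODELS set.

    The calling process's own environment is left unchanged.
    """
    env = dict(os.environ)
    env["OLLAMA_MODELS"] = os.path.expanduser(models_dir)
    return env


def start_server(models_dir: str) -> subprocess.Popen:
    """Start `ollama serve` with its model directory pointed at models_dir.

    Assumes `ollama` is installed and on the PATH.
    """
    return subprocess.Popen(["ollama", "serve"], env=ollama_env(models_dir))


# Example usage (hypothetical path on the larger SSD):
# server = start_server("/mnt/big-ssd/ollama-models")
# server.wait()
```

If Ollama runs as a background service or desktop app instead, the variable would need to be set in that service's environment. Models that were already downloaded will probably need to be moved or pulled again.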

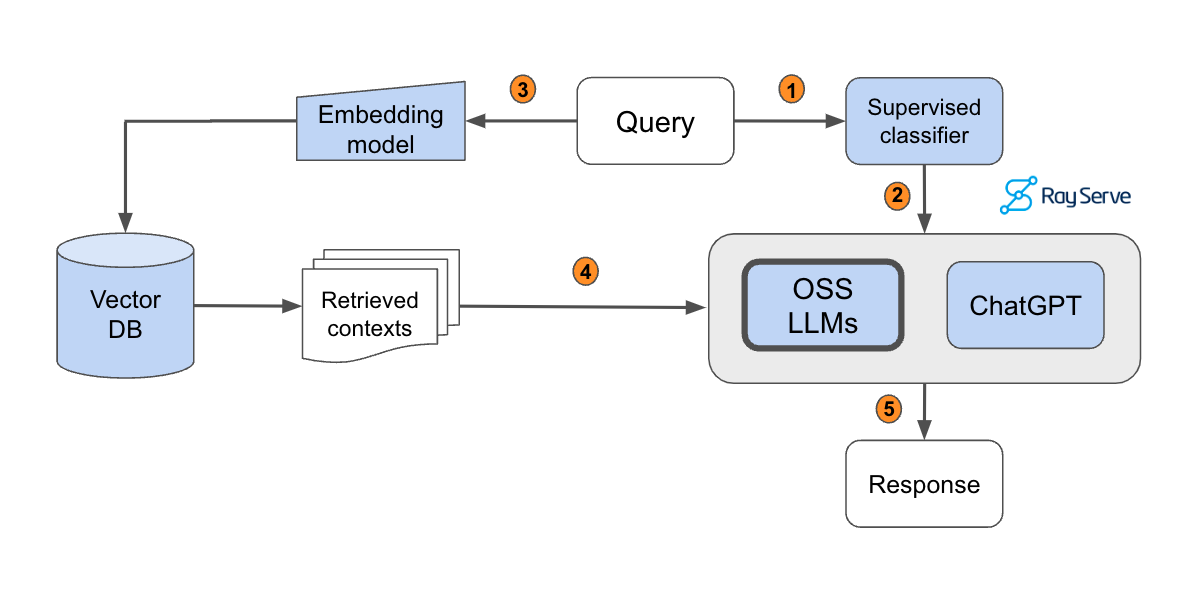

Ray project llm applications A Comprehensive Guide To Building RAG

Recently I installed Ollama and started to test its chatting skills. Unfortunately, so far the results were very strange. Basically I'm getting too …

Mar 8, 2024 · How to make Ollama faster with an integrated GPU? I decided to try out ollama after watching a YouTube video. The ability to run LLMs locally and which could give output faster …

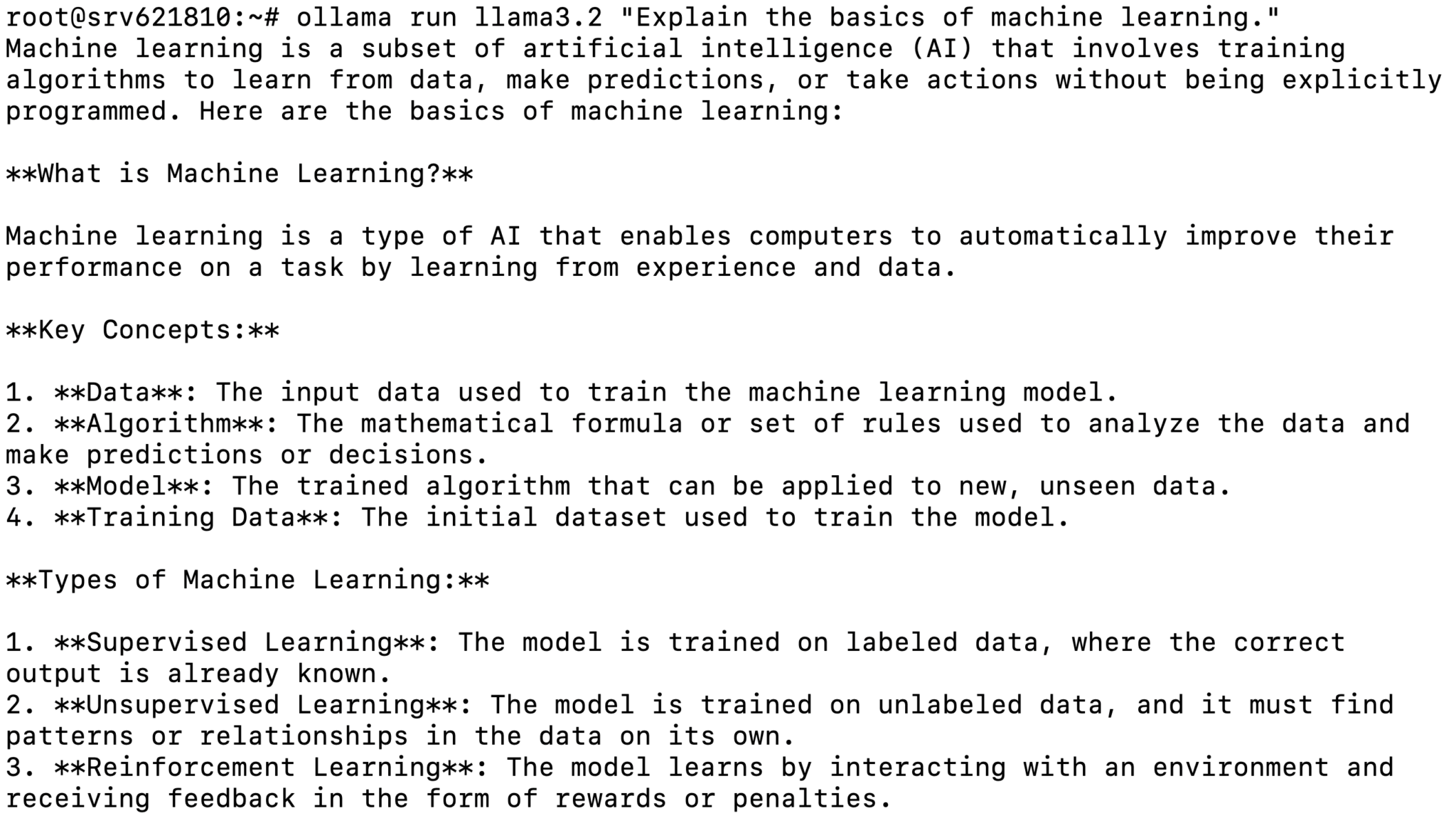

Ollama CLI Tutorial Learn To Use Ollama In The Terminal

Dec 20, 2023 · I'm using ollama to run my models. I want to use the mistral model, but create a LoRA to act as an assistant that primarily references data I've supplied during training. This data …

I've just installed Ollama on my system and chatted with it a little. Unfortunately, the response time is very slow even for lightweight models like …

Gallery for Ollama.load Model

Running LLMs Locally With Ollama Tech Couch

Clip model load Don't Support Projector With Currently Issue 4925

Ollama Modelfile Tutorial Customize Gemma Open Models With Ollama

Thanks Marcin I Agree That Tracing At The API Gateway Level Is Not

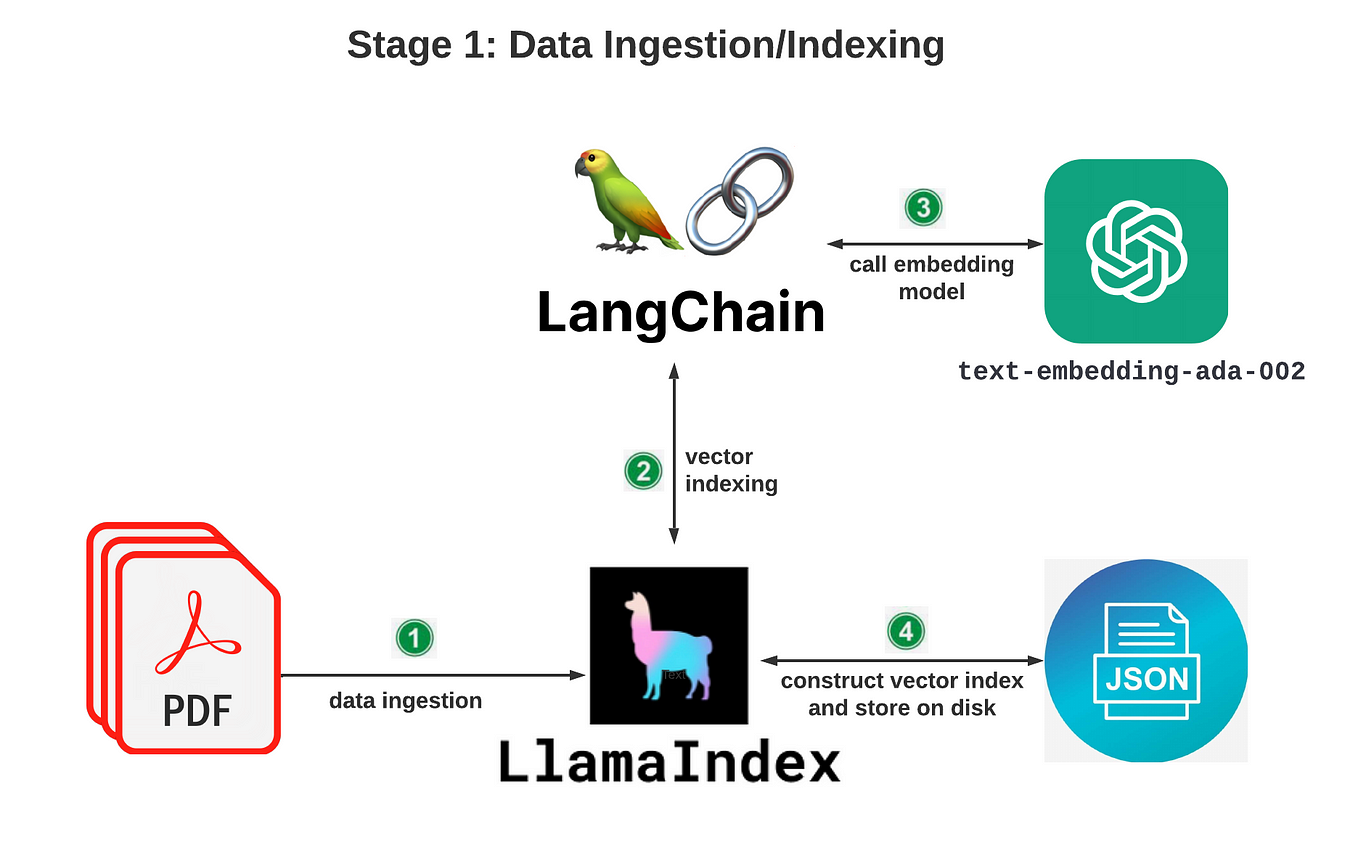

Chatting With Your Documents In The CLI With Ollama And LlamaIndex By

Building RAG based LLM Applications For Production

3 Ways To Set Up LLaMA 2 Locally On CPU Part 1 Llama cpp python