Ollama Using NPU

Jan 10, 2024 · To get rid of the model, I needed to install Ollama again and then run "ollama rm llama2". It should be transparent where it installs so I can remove it later.
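For anyone in the same spot as the poster above, here is a minimal Python sketch of the two things the post asks about: where the models probably live, and how to call `ollama rm`. The helper names `models_dir`, `remove_model_cmd`, and `remove_model` are made up for this example. The default path `~/.ollama/models` and the `OLLAMA_MODELS` override are assumptions based on a typical user-level install; a system service install on Linux may store models elsewhere.

```python
import os
import shutil
import subprocess
from pathlib import Path


def models_dir() -> Path:
    """Best guess at the model store (assumption, not read from Ollama itself):
    OLLAMA_MODELS if it is set, otherwise ~/.ollama/models."""
    override = os.environ.get("OLLAMA_MODELS")
    return Path(override) if override else Path.home() / ".ollama" / "models"


def remove_model_cmd(name: str) -> list[str]:
    """Build the CLI call quoted in the post: `ollama rm <name>`."""
    return ["ollama", "rm", name]


def remove_model(name: str) -> bool:
    """Run `ollama rm <name>`; return False if the CLI is missing or the call fails."""
    if shutil.which("ollama") is None:
        return False
    result = subprocess.run(remove_model_cmd(name), check=False)
    return result.returncode == 0


if __name__ == "__main__":
    # Only print here; call remove_model("llama2") yourself when you mean it.
    print("Models are probably stored in:", models_dir())
    print("Command to remove llama2:", " ".join(remove_model_cmd("llama2")))
```

Run as a script, this only prints the guessed location and the command, so nothing is deleted until `remove_model` is called explicitly.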

Ollama Python Library Released How To Implement Ollama RAG YouTube

Mar 8, 2024 · How to make Ollama faster with an integrated GPU? I decided to try out Ollama after watching a YouTube video, drawn by the ability to run LLMs locally and get output faster. Recently I installed Ollama and started to test its chatting skills. Unfortunately, so far, the results were very strange. Basically, I'm getting too…
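When responses are slow, a useful first check is whether the loaded model is actually sitting in GPU memory. The sketch below is one rough way to do that, assuming a local Ollama server on the default port 11434 and assuming its `/api/ps` endpoint reports `size` and `size_vram` for each running model. The function names are made up for this example, and the result says nothing about NPU use.

```python
import json
import urllib.request


def gpu_share(model: dict) -> float:
    """Fraction of a loaded model held in GPU memory (0.0 = all on CPU).

    Assumes `size` and `size_vram` are byte counts from /api/ps.
    """
    size = model.get("size", 0)
    vram = model.get("size_vram", 0)
    return vram / size if size else 0.0


def running_models(base_url: str = "http://localhost:11434") -> list[dict]:
    """Ask the local server which models are currently loaded."""
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=5) as resp:
        return json.load(resp).get("models", [])


if __name__ == "__main__":
    try:
        for m in running_models():
            print(f"{m.get('name')}: {gpu_share(m):.0%} of the model is in GPU memory")
    except OSError as exc:
        # URLError and connection failures are OSError subclasses.
        print("Could not reach a local Ollama server:", exc)
```

A share well below 100% suggests that part of the model is running on the CPU, which is a common reason for slow output on machines with integrated graphics.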

Quivr Local Setup Using Ollama And Open Source Model YouTube

I've just installed Ollama on my system and chatted with it a little. Unfortunately, the response time is very slow even for lightweight models like…

Dec 20, 2023 · I'm using Ollama to run my models. I want to use the Mistral model but create a LoRA to act as an assistant that primarily references data I've supplied during training. This data…

Gallery for Ollama Using Npu

Leader Copilot AI PCs

Ollama Logo Free Download SVG PNG A LobeHub

Ollama Can Support Huawei Ascend NPU Issue 4282 Ollama ollama

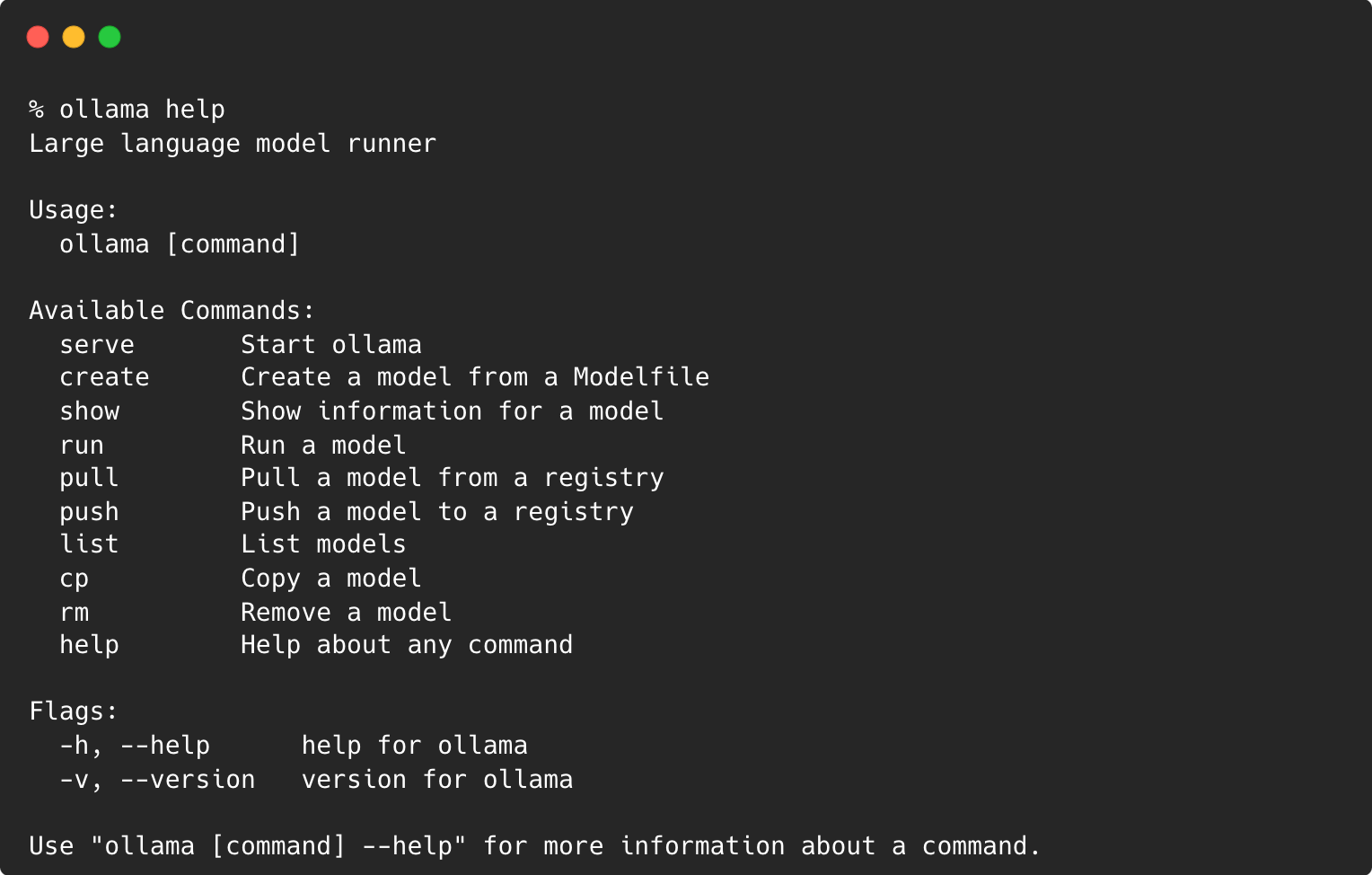

Ollama Running Large Language Models On Your Machine Unmesh Gundecha

GitHub XinJason Cross kingdom synthetic microbiota

Support For Ascend NPU Hardware Issue 5315 Ollama ollama GitHub

Hosting Ollama Models Locally With Ubuntu Server A Step by Step Guide

BUG Ollama Not Using GPU And Not Using NPU Issue 126 Quic ai hub

FlowiseAI Fully Managed Open Source Service Elest io

Run Custom GGUF Model On Ollama