Ollama Not Using All CPU Cores



Dec 20, 2023 · I'm using Ollama to run my models. I want to use the Mistral model but create a LoRA to act as an assistant that primarily references data I've supplied during training. This data …


Jan 15, 2024 · I currently use Ollama with Ollama WebUI, which has a look and feel like ChatGPT. It works really well for the most part, though it can be glitchy at times. There are a lot of features …

Feb 15, 2024 · OK, so Ollama doesn't have a stop or exit command. We have to manually kill the process, and this is not very useful, especially because the server respawns immediately. So …


Hey guys, I mainly run my models with Ollama, and I am looking for suggestions for uncensored models that I can use with it. Since there are a lot already, I feel a bit …

Mar 8, 2024 · How to make Ollama faster with an integrated GPU? I decided to try out Ollama after watching a YouTube video. The ability to run LLMs locally, which could give output faster …
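For the headline problem of Ollama leaving CPU cores idle, one setting worth checking is `num_thread`. Ollama documents it both as a Modelfile parameter and as a per-request option. By default Ollama chooses a thread count itself, and its documentation suggests matching the number of physical cores. The following is a minimal sketch. It assumes a local server on the default `http://localhost:11434` and uses the non-streaming `/api/generate` endpoint. `guess_physical_cores`, `build_request`, and `generate` are hypothetical helpers. Halving `os.cpu_count()` is a rough assumption that each core exposes two hardware threads.

```python
import json
import os
import urllib.request

# Assumption: Ollama's default local endpoint; change it if your server listens elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def guess_physical_cores() -> int:
    """Hypothetical heuristic: assumes 2 hardware threads per core (SMT/Hyper-Threading)."""
    logical = os.cpu_count() or 1
    return max(1, logical // 2)


def build_request(model: str, prompt: str, num_thread: int) -> dict:
    """Build a non-streaming /api/generate body with an explicit thread count."""
    if num_thread < 1:
        raise ValueError("num_thread must be >= 1")
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        # Per-request override of the thread count Ollama would otherwise pick.
        "options": {"num_thread": num_thread},
    }


def generate(model: str, prompt: str, num_thread: int) -> str:
    """POST the request to a running Ollama server and return the generated text."""
    body = json.dumps(build_request(model, prompt, num_thread)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        # With "stream": False the server replies with one JSON object.
        return json.loads(resp.read())["response"]
```

With a server running and the `mistral` model pulled, `generate("mistral", "Why is the sky blue?", guess_physical_cores())` should return the reply text. Keeping `build_request` separate from the network call makes it easy to inspect the payload before sending it.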

Gallery for Ollama Not Using All Cpu Cores Free

How To Install And Connect SillyTavern With Ollama Locally YouTube

Chat With Multiple PDFs LangChain App Tutorial In Python Free LLMs

pip Is Not Recognized As An Internal Or External Command operable

![]()

Windows Preview Ollama Blog

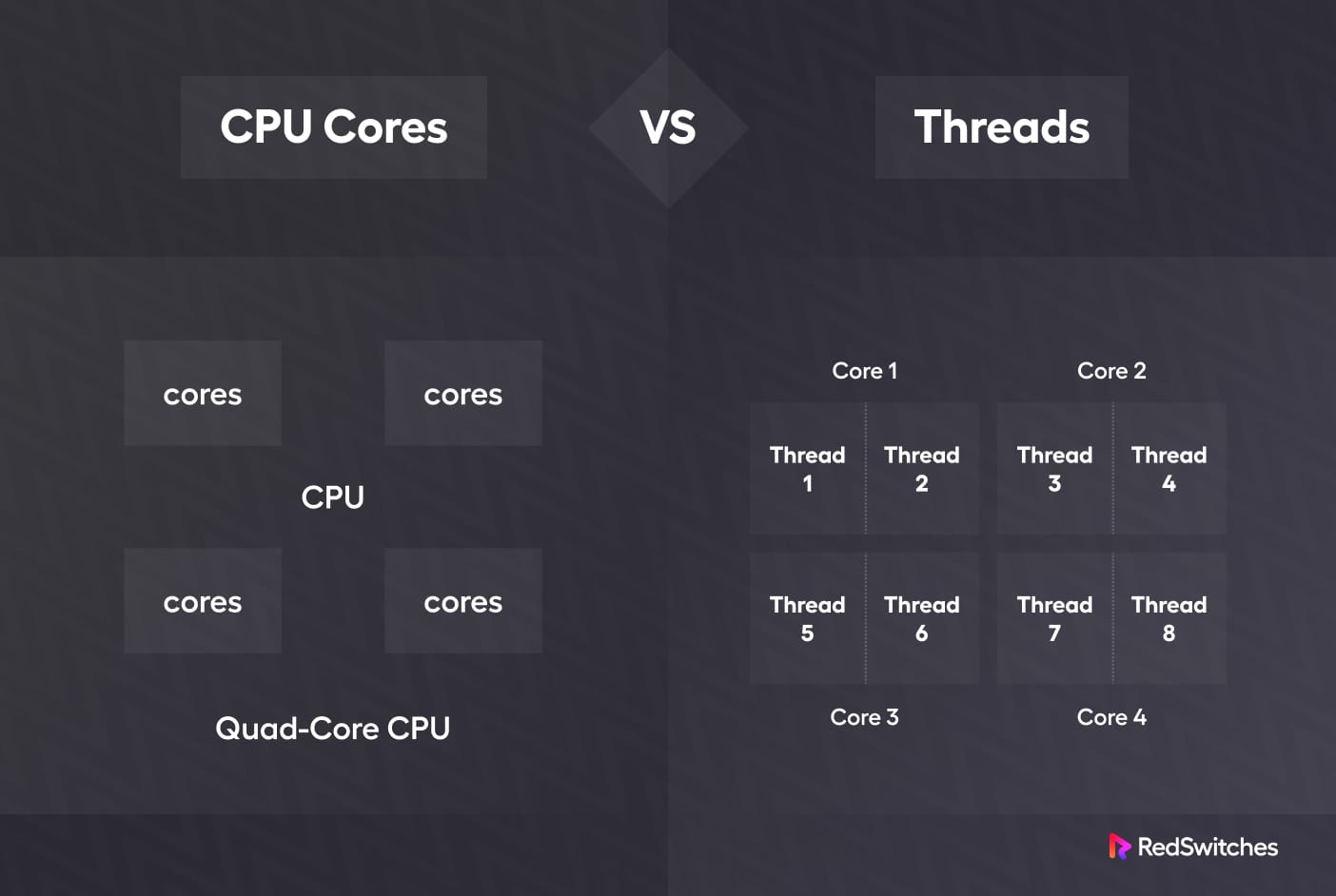

Cpus Vs Cpus Collection Discounts Www oceanproperty co th

Ollama

Ollama Not Running Failing To Load Issue 2768 Ollama ollama GitHub

Ollama Is Not Using My GPU Windows Issue 3201 Ollama ollama GitHub

LangChain LangChain AWS

Nvidia A100 Ollama Not Using GPU Issue 5567 Ollama ollama GitHub