Ollama Error Invalid Command Line

Understanding and Resolving SyntaxError: Invalid Decimal Literal in … Ollama: Run Stable Diffusion Prompt Generator Model with Ollama Docker. Invalid Argument When Executing Windows Commands on Ubuntu 20.04. Ollama: Running Large Language Models on Your Machine (Unmesh Gundecha).


Apr 15, 2024 · I recently got ollama up and running. The only thing is I want to change where my models are located, as I have 2 SSDs and they're currently stored on the smaller one running …

What Is Ollama: Everything Important You Should Know. Delete Command Line History Inside Ollama (Issue #1854, ollama/ollama). Run a Private AI Model in Your Computer and on the Command Line With …
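For the question above about moving models to a different drive, a minimal Python sketch follows. It relies on the `OLLAMA_MODELS` environment variable, which Ollama reads to locate its model directory. The helper name `env_with_model_dir` and the path `/mnt/big-ssd/ollama-models` are hypothetical and chosen only for illustration.

```python
import os


def env_with_model_dir(model_dir, base_env=None):
    """Return a copy of the environment with OLLAMA_MODELS set to model_dir.

    The original mapping is never modified, so the caller decides where the
    new environment is used (for example, when launching the server).
    """
    env = dict(os.environ if base_env is None else base_env)
    # Expand "~" and normalise to an absolute path before exporting it.
    env["OLLAMA_MODELS"] = os.path.abspath(os.path.expanduser(model_dir))
    return env


# Hypothetical directory on the larger SSD (an assumption, not from the post).
env = env_with_model_dir("/mnt/big-ssd/ollama-models", base_env={})
print(env["OLLAMA_MODELS"])
```

The returned mapping could then be passed as `env=` to `subprocess.Popen(["ollama", "serve"], env=env)`. If Ollama runs as a background service instead, the variable most likely has to be set in that service's configuration, and models already downloaded would need to be moved or pulled again.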

Understanding And Resolving SyntaxError Invalid Decimal Literal In

Feb 15, 2024 · OK, so ollama doesn't have a stop or exit command. We have to manually kill the process, and this is not very useful, especially because the server respawns immediately. So …

Jan 10, 2024 · To get rid of the model I needed to install Ollama again and then run "ollama rm llama2". It should be transparent where it installs, so I can remove it later.
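The two posts above come down to two commands: removing a model with `ollama rm`, and stopping a server that keeps respawning. The Python sketch below only builds those commands and runs them if the executable is found. It assumes a Linux install where Ollama is managed as a systemd service named `ollama`; on other setups the stop step will differ. All function names are hypothetical.

```python
import shutil
import subprocess


def remove_model_command(model):
    """Build the argument list for `ollama rm <model>`."""
    # Reject values that would be misread as a flag or as several arguments.
    if not model or model.startswith("-") or any(ch.isspace() for ch in model):
        raise ValueError(f"invalid model name: {model!r}")
    return ["ollama", "rm", model]


def stop_service_command():
    """Build the command that stops the service (assumes a systemd unit named 'ollama')."""
    return ["systemctl", "stop", "ollama"]


def run_if_available(cmd):
    """Run cmd only when its executable is on PATH; return None otherwise."""
    if shutil.which(cmd[0]) is None:
        return None
    return subprocess.run(cmd, capture_output=True, text=True)


# Printing the commands has no side effects; nothing is executed here.
print(remove_model_command("llama2"))
print(stop_service_command())
```

Stopping the service through the service manager, rather than killing the process, is what would prevent the immediate respawn described in the post, provided the assumption about the systemd unit holds.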

How To Embed From The Command Line ollama coding programming YouTube

Mar 8, 2024 · How to make Ollama faster with an integrated GPU? I decided to try out ollama after watching a YouTube video. The ability to run LLMs locally and which could give output faster …

Dec 20, 2023 · I'm using ollama to run my models. I want to use the mistral model but create a LoRA to act as an assistant that primarily references data I've supplied during training. This data …

Gallery for Ollama Error Invalid Command Line

Run A Private AI Model In Your Computer And On The Command Line With

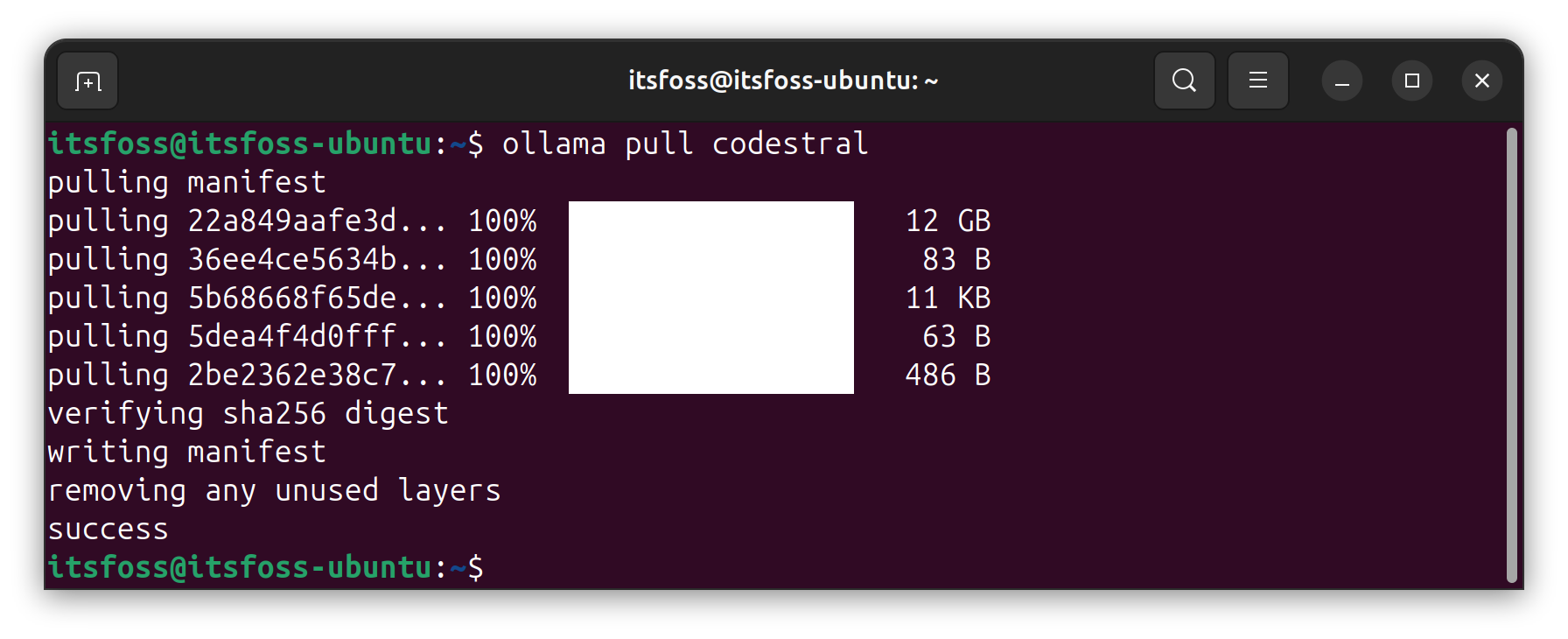

Ollama Run Stable Diffusion Prompt Generator Model With Ollama Docker

How To Fix The INVALID COMMAND LINE PARAMETER Error In PVZ DEMO

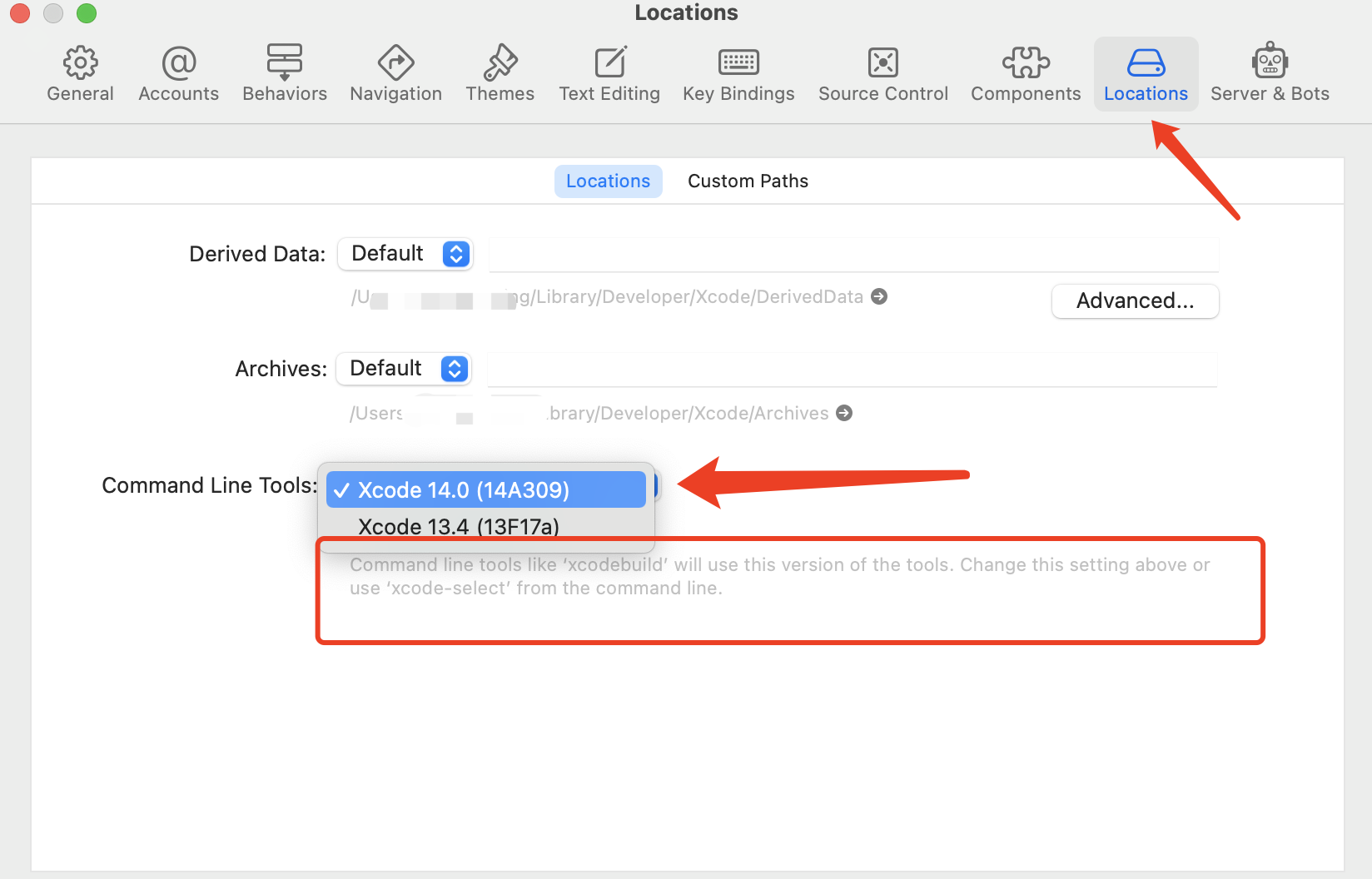

IOS Xcrun Error Invalid Active Developer Path Library Developer

Invalid Argument When Executing Windows Commands On Ubuntu 20.04

What Is Ollama Everything Important You Should Know

ShadowsocksR Plus NOT RUNNING Issue 2052 Coolsnowwolf lede GitHub

Ollama Running Large Language Models On Your Machine Unmesh Gundecha

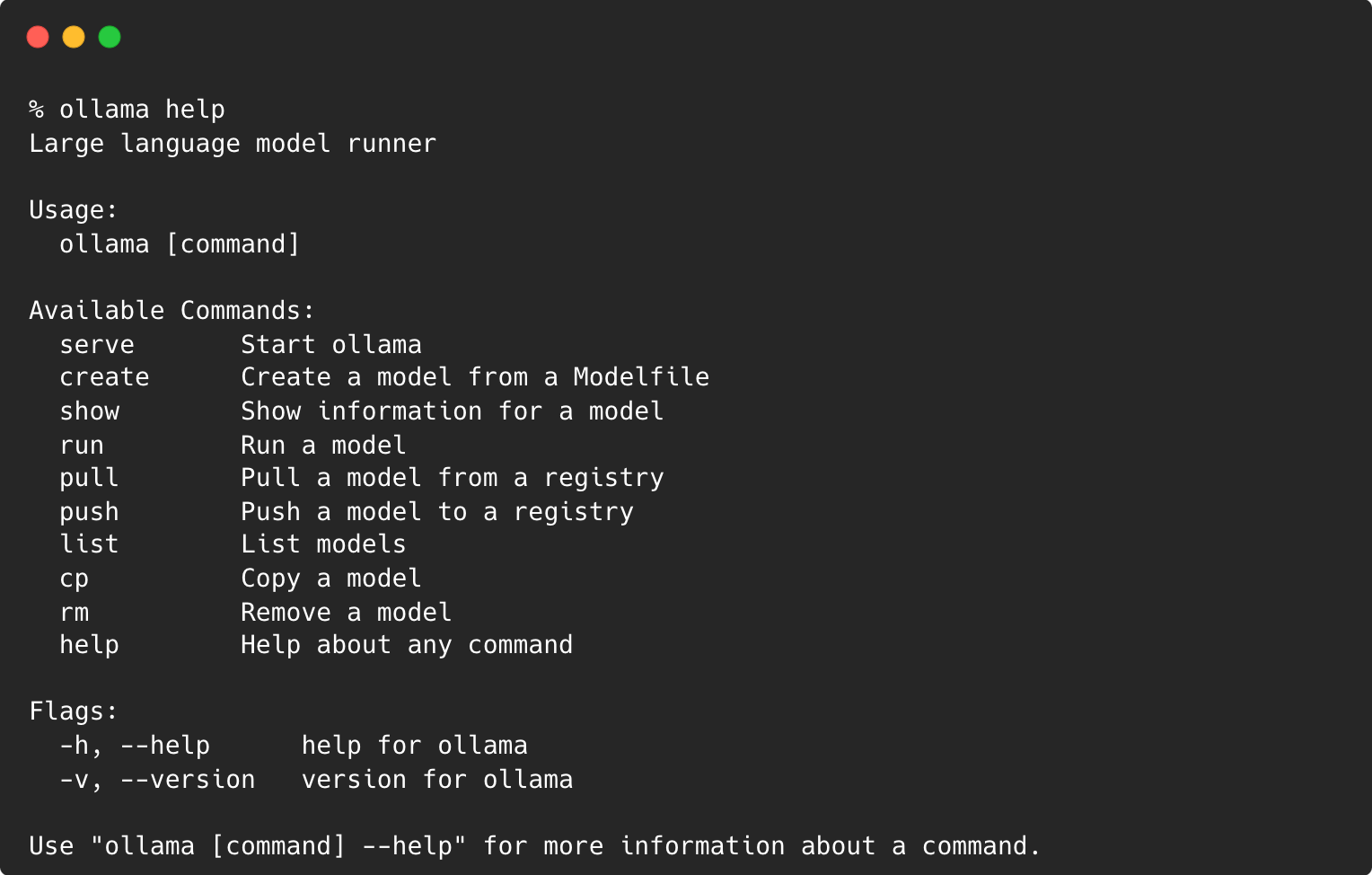

GitHub Gbechtold Ollama CLI A Command line Interface Tool For

ERROR Invalid Command Line Arguments No Endpoint Pointing To The Local