Ollama Docker Gpu Wsl Standings

Ollama: self-hosted AI chat with Llama 2, Code Llama, and more in Docker. GitHub Coela oss intel gpu wsl advisor: a PowerShell tool that… 2023 WSL Docker GPU TensorFlow GPU PyTorch GPU.


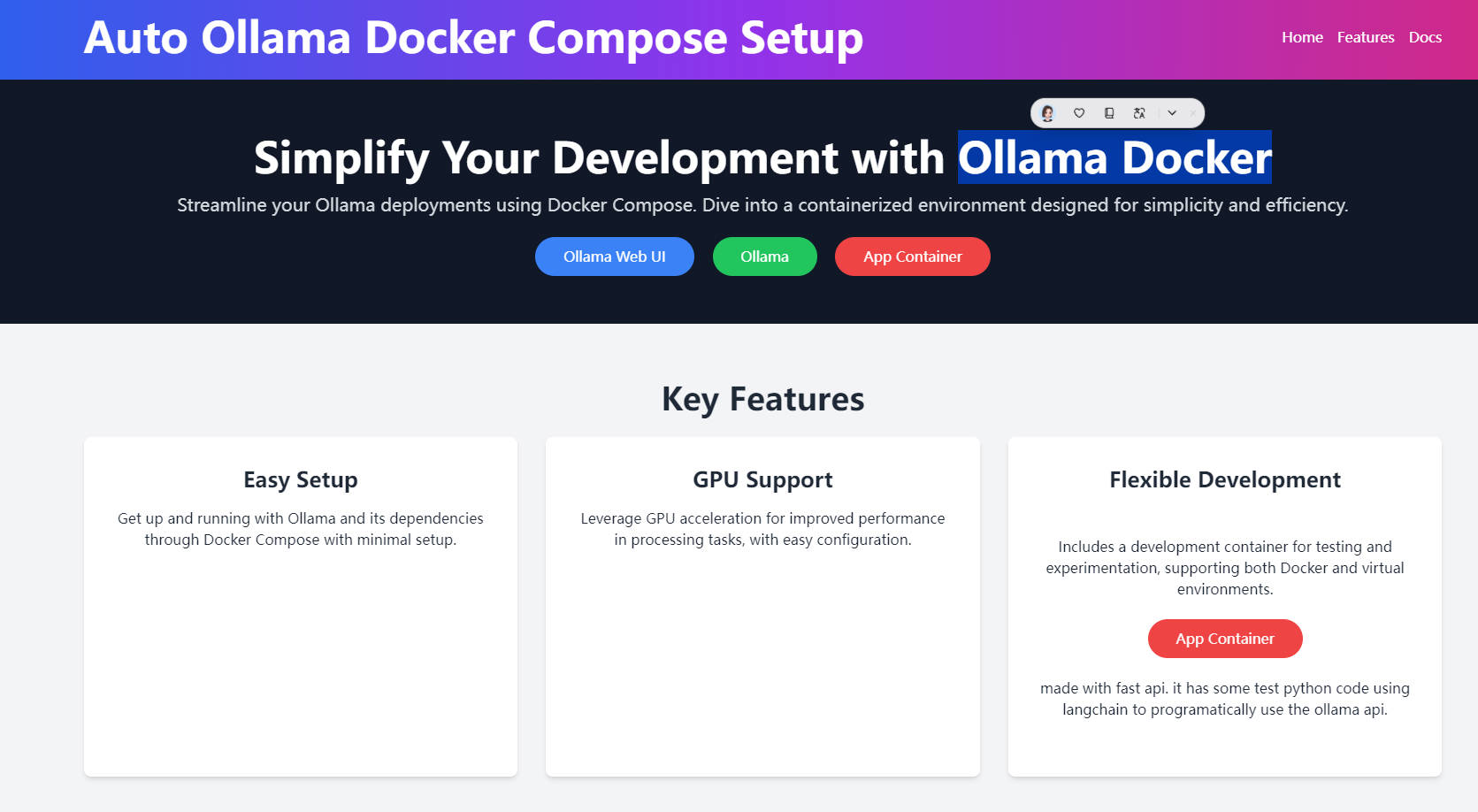

Jan 15, 2024 · I currently use Ollama with Ollama WebUI, which has a look and feel like ChatGPT. It works really well for the most part, though it can be glitchy at times. There are a lot of features…

Ollama Self Hosted AI Chat With Llama 2 Code Llama And More In Docker

Mar 8, 2024 · How to make Ollama faster with an integrated GPU? I decided to try out Ollama after watching a YouTube video. The ability to run LLMs locally and which could give output faster…

Jan 10, 2024 · To get rid of the model, I needed to install Ollama again and then run "ollama rm llama2". It should be transparent where it installs, so I can remove it later.
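For the cleanup question in the Jan 10 post, a minimal shell sketch of listing and removing a model and finding where the files live. The helper name `ollama_models_dir` is hypothetical, and the default path is an assumption: it is the per-user location, `OLLAMA_MODELS` is assumed to override it, and a Linux service install or a Docker container may store models elsewhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the directory where model files are expected.
# Assumption: OLLAMA_MODELS overrides the default per-user location;
# service installs and containers may use a different path.
ollama_models_dir() {
  printf '%s\n' "${OLLAMA_MODELS:-$HOME/.ollama/models}"
}

# Only call the CLI if it is actually installed.
if command -v ollama >/dev/null 2>&1; then
  ollama list                      # show locally stored models
  ollama rm llama2 || true         # remove the model named in the post
  du -sh "$(ollama_models_dir)" 2>/dev/null || true  # disk space still in use
fi
```

If `du` reports nothing, the models are probably stored under a different path than the one assumed here.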

Ollama Run Build And Share LLMs Guidady

I've just installed Ollama on my system and chatted with it a little. Unfortunately, the response time is very slow even for lightweight models like…

Dec 20, 2023 · I'm using Ollama to run my models. I want to use the Mistral model but create a LoRA to act as an assistant that primarily references data I've supplied during training. This data…
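For the slow-response complaint above, one thing worth checking is whether the model is running on the GPU at all. The sketch below assumes an NVIDIA GPU, Docker running under WSL 2, and the NVIDIA Container Toolkit already set up; none of that is stated in the posts, and it would not help with an integrated GPU. The function names and the `DRY_RUN` switch are hypothetical. By default the script only prints the commands.

```shell
#!/usr/bin/env bash
# Dry-run helper: print each command unless DRY_RUN=0 is set.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Start the Ollama container with all GPUs exposed (assumes NVIDIA tooling).
start_ollama_gpu() {
  run docker run -d --gpus=all -v ollama:/root/.ollama \
    -p 11434:11434 --name ollama ollama/ollama
}

# Check that the GPU is visible in the container, then list loaded models
# along with whether they are running on CPU or GPU.
check_ollama_gpu() {
  run docker exec ollama nvidia-smi
  run docker exec ollama ollama ps
}

start_ollama_gpu
check_ollama_gpu
```

Running it with `DRY_RUN=0` executes the commands instead of printing them. If `ollama ps` reports the model on CPU, slow responses are expected regardless of model size.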

Gallery for Ollama Docker Gpu Wsl Standings

Ollama Docker GPU Docker AI Aitoolnet

GitHub Coela oss intel gpu wsl advisor A PowerShell Tool That

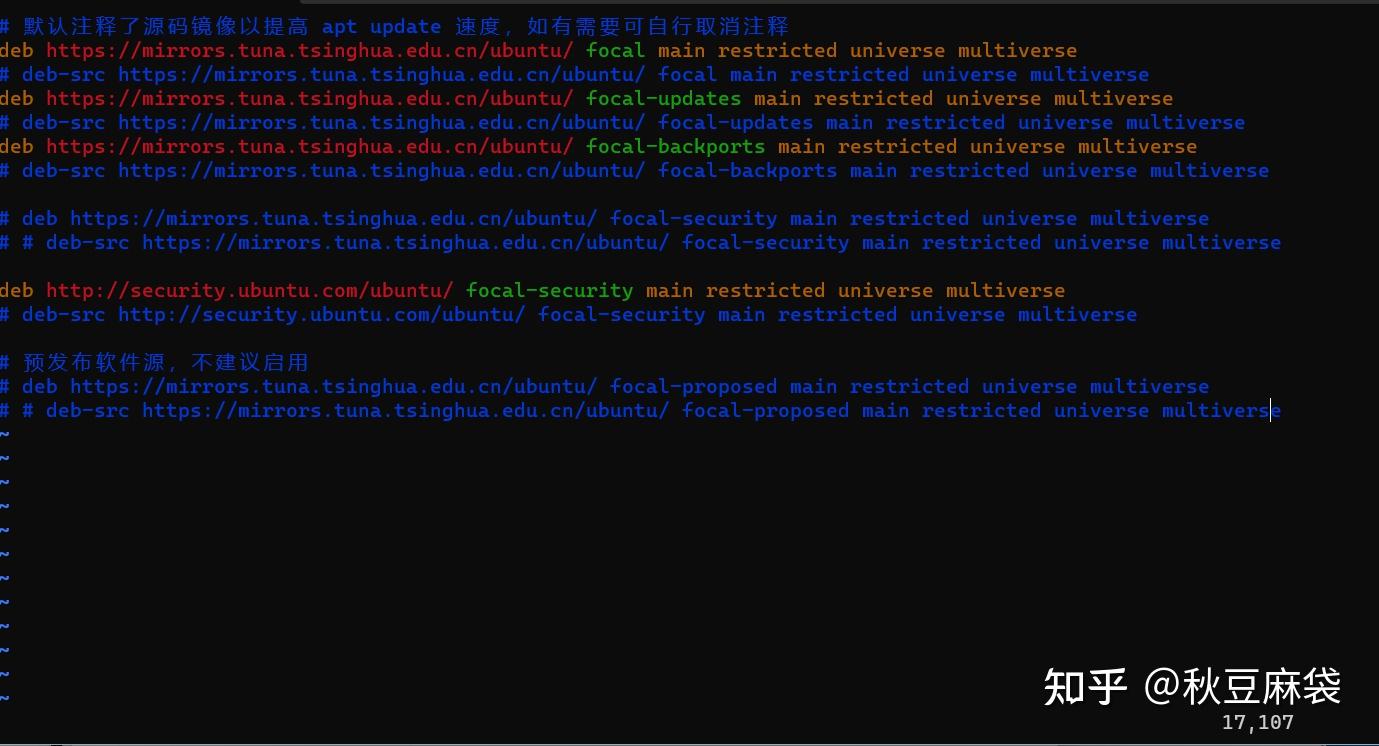

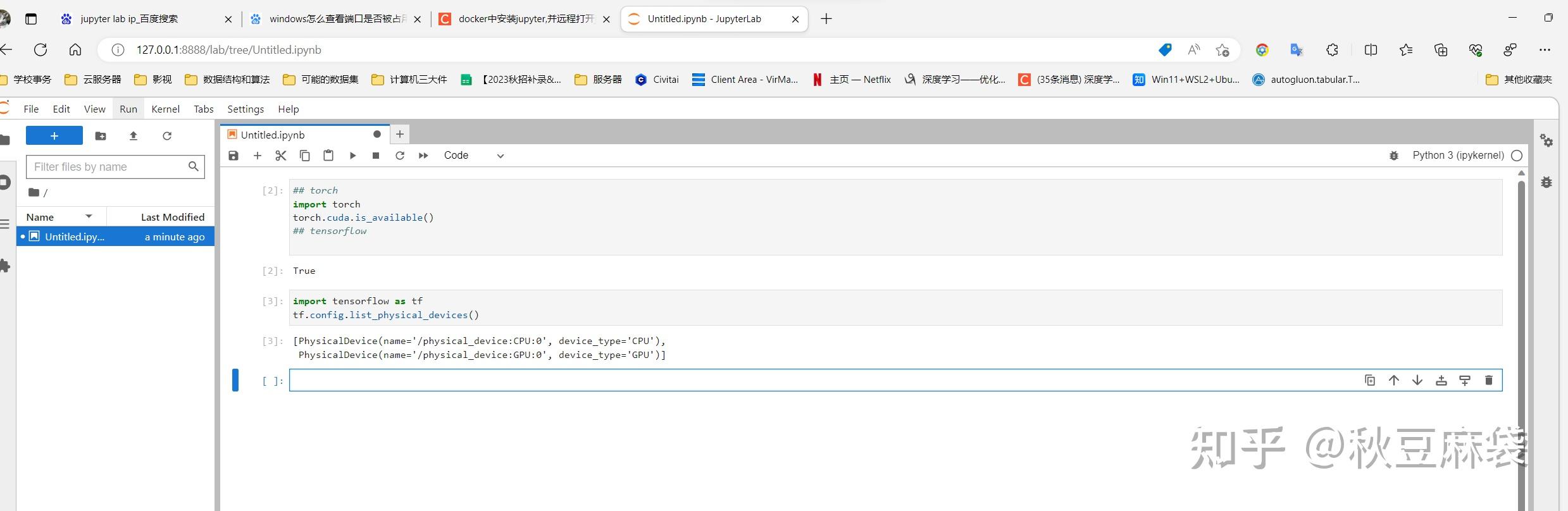

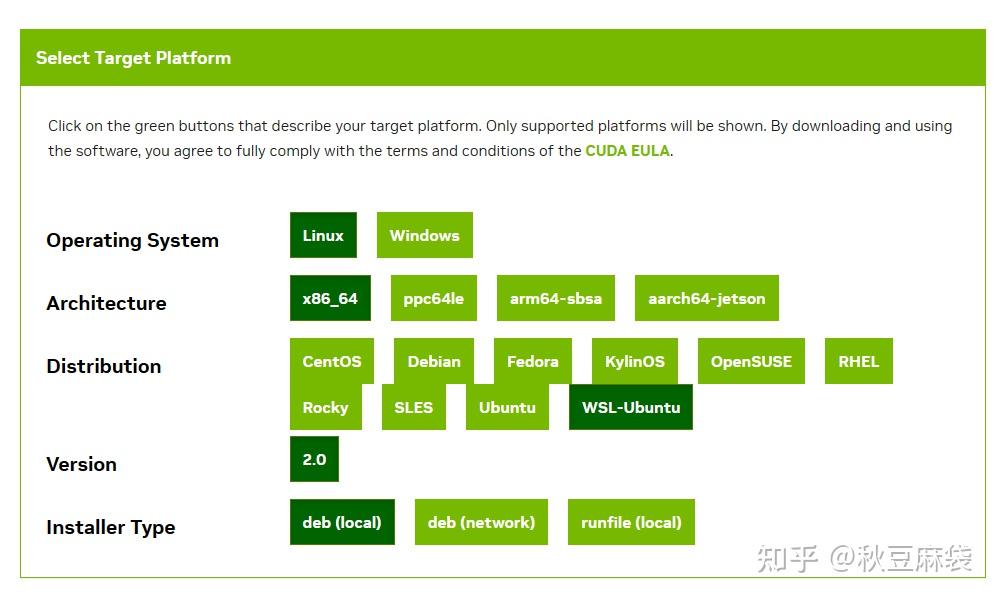

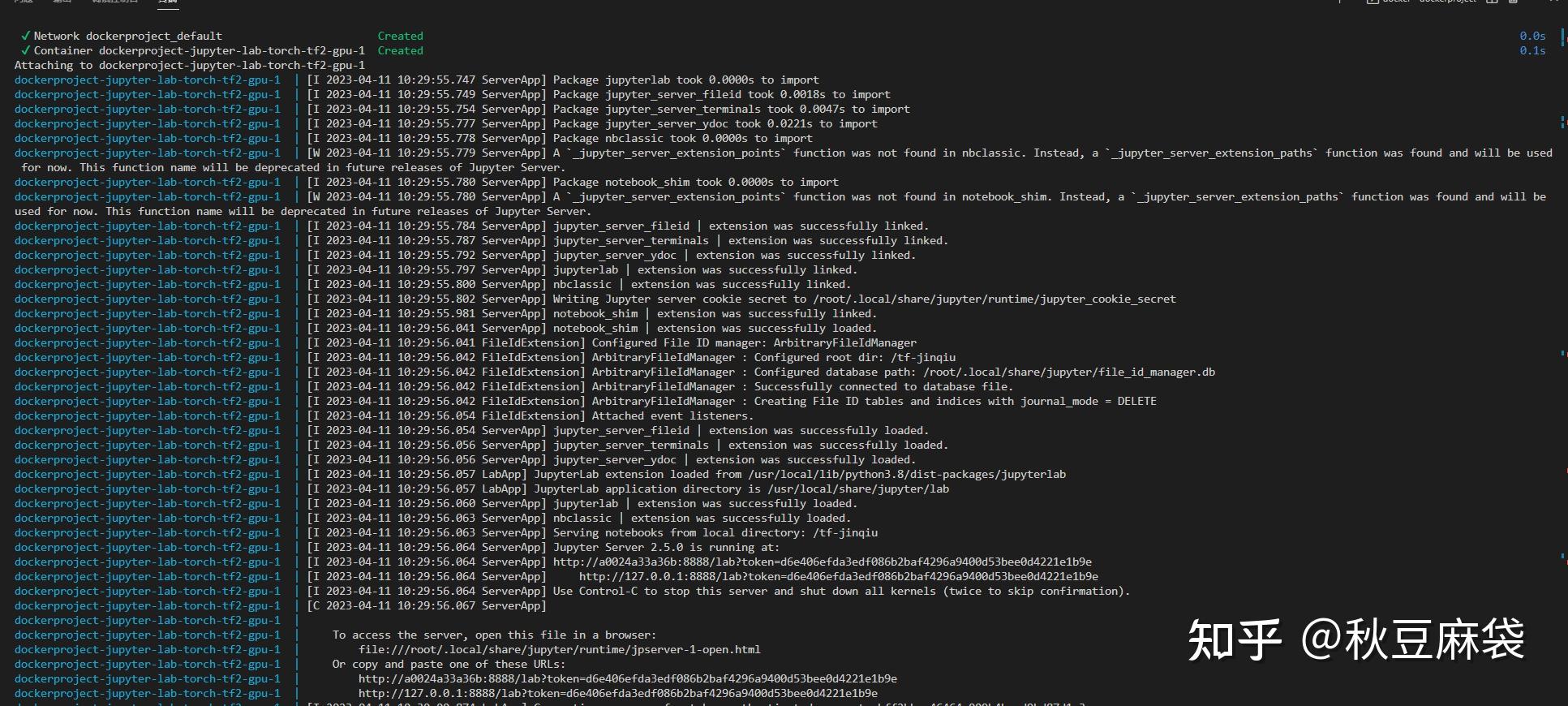

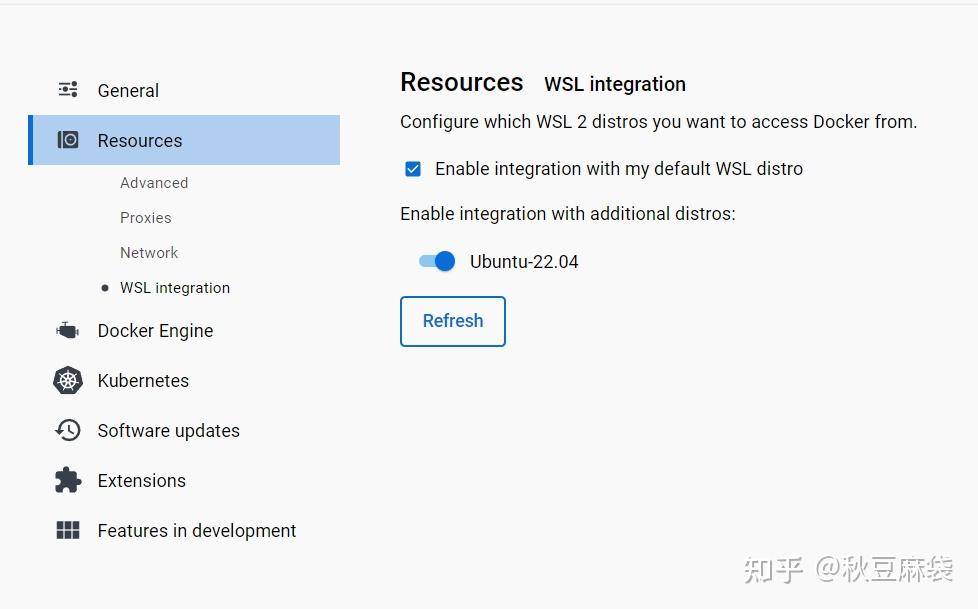

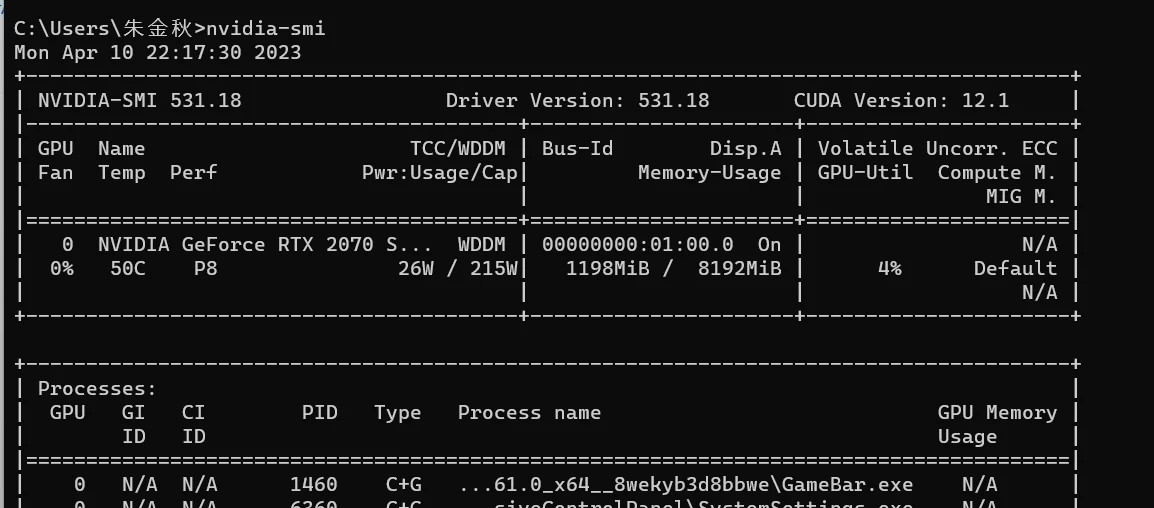

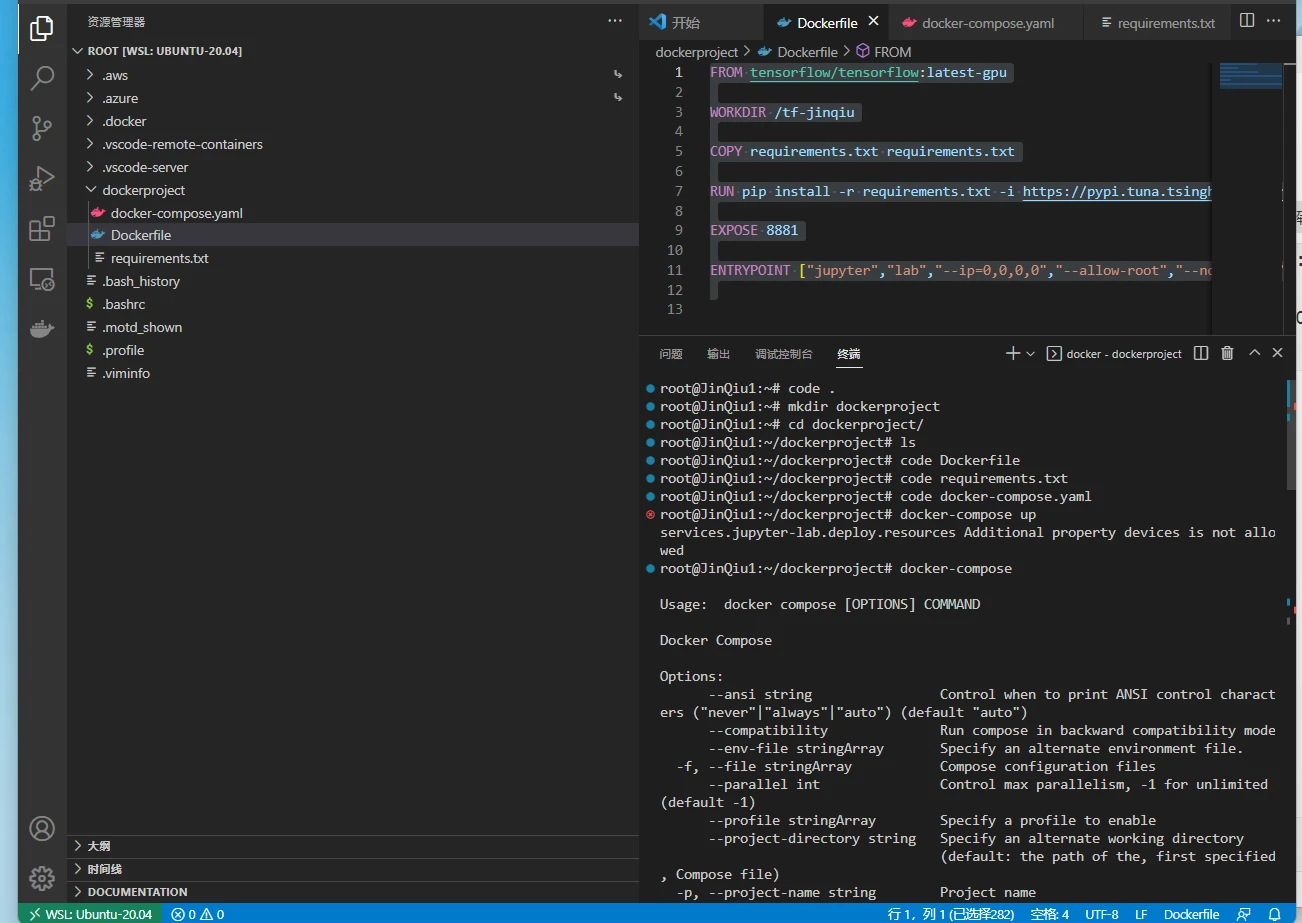

2023 WSL Docker gpu tensorflow gpu pytorch gpu
