Multi Head Attention Code

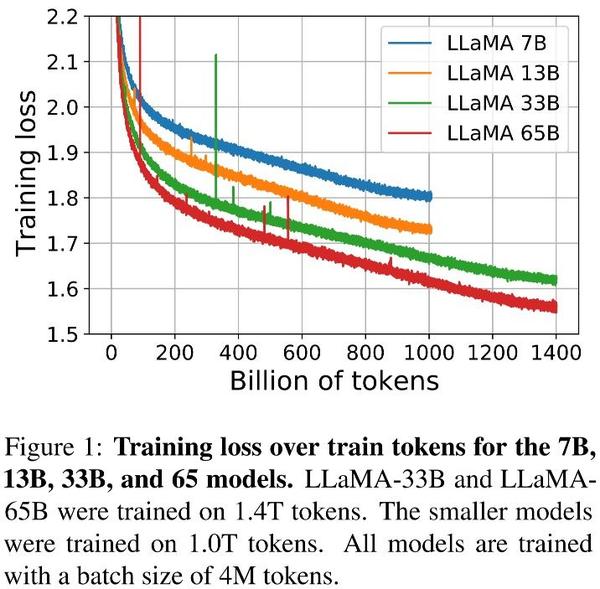

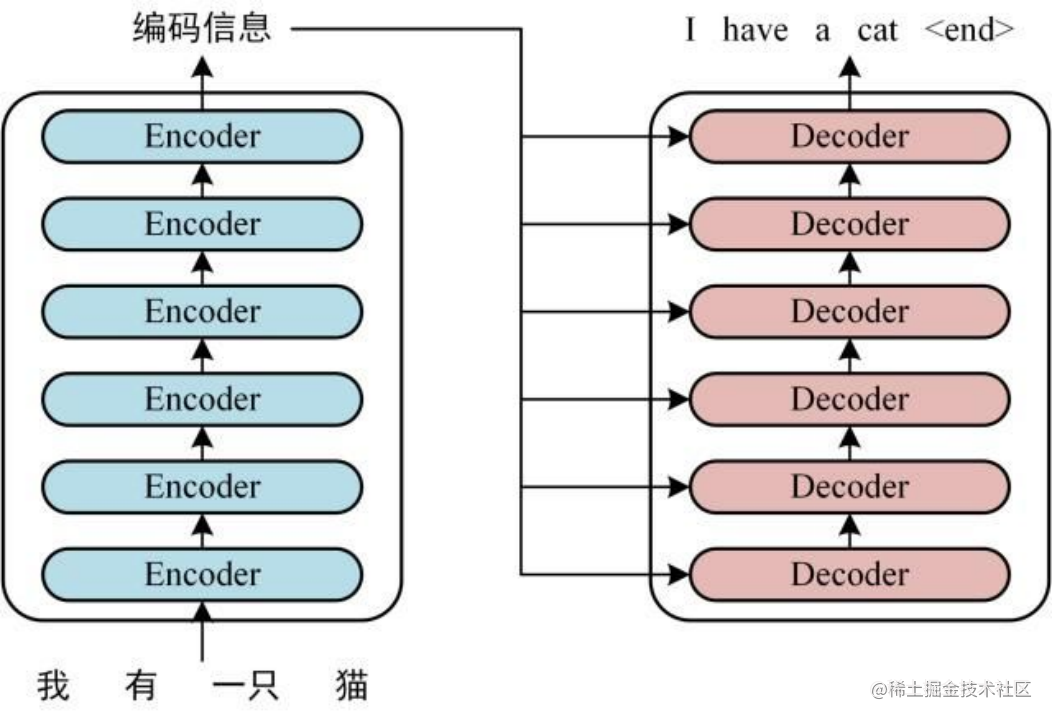

LLaMA Open And Efficient Foundation Language Models

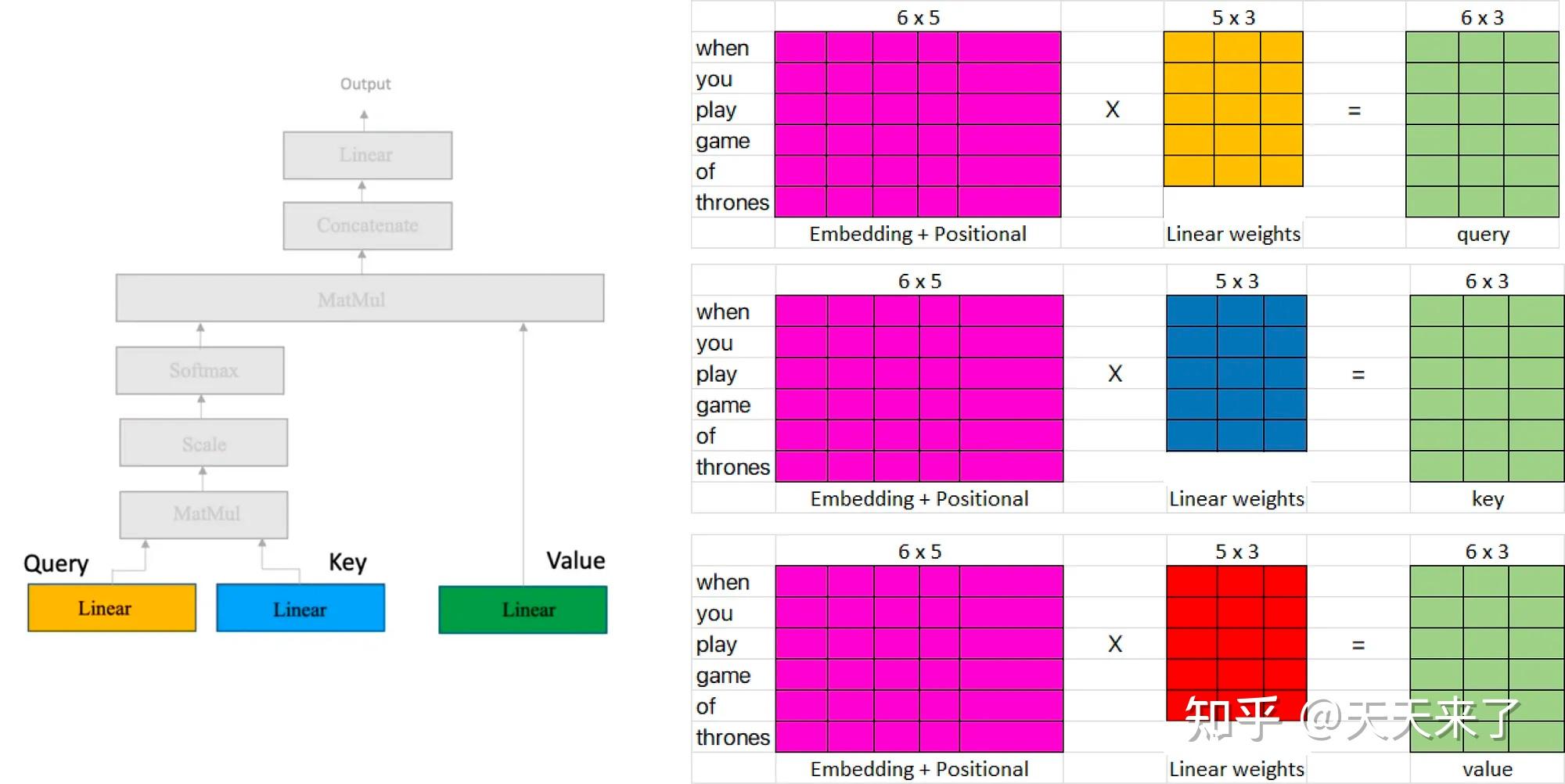

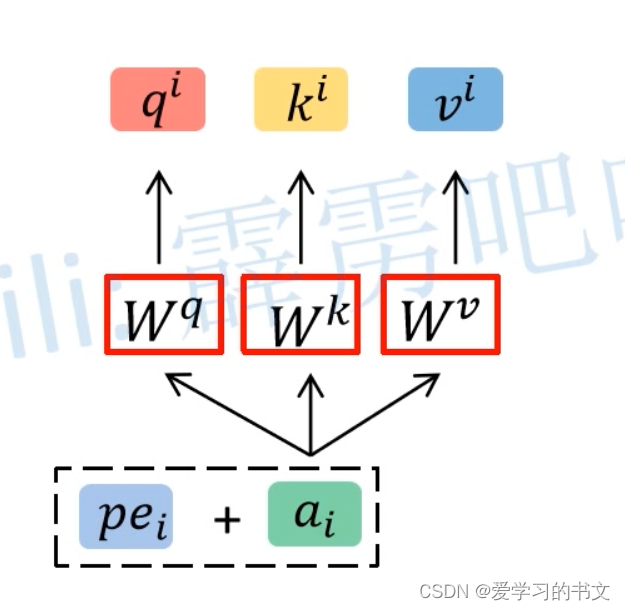

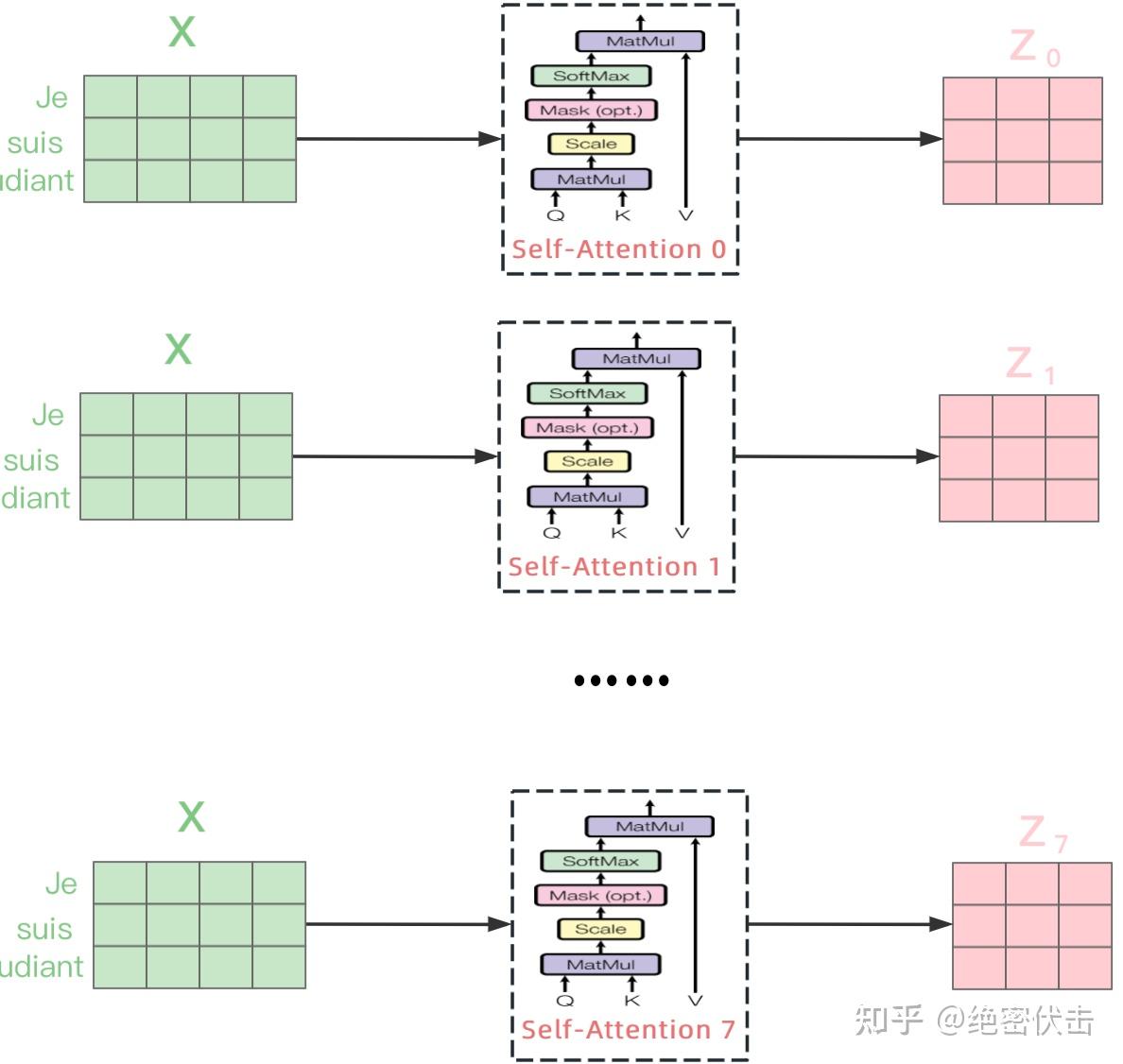

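Mar 1, 2022 · Attention, Multi-Head Attention, token. The conclusion first: on the SM80 architecture, the Multi-Stage implementation depends to some extent on instruction-level parallelism (ILP) at the GPU hardware level, whereas on the SM90 architecture the Warp Specialization implementation is …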

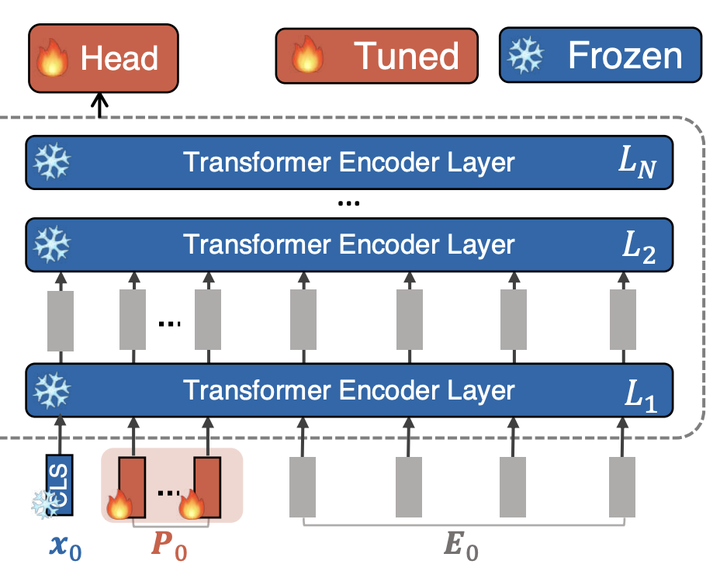

Visual Prompt Tuning ECCV 2022

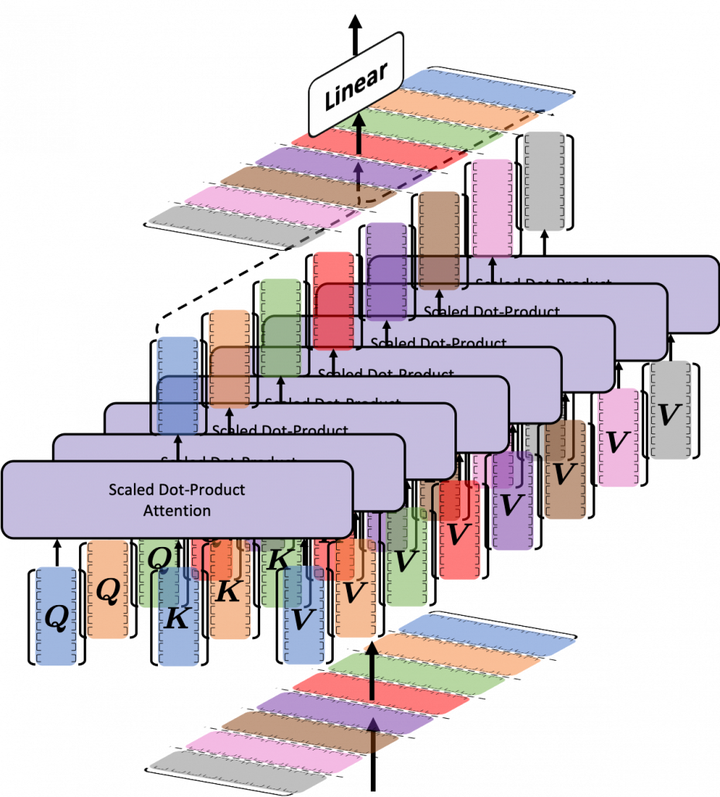

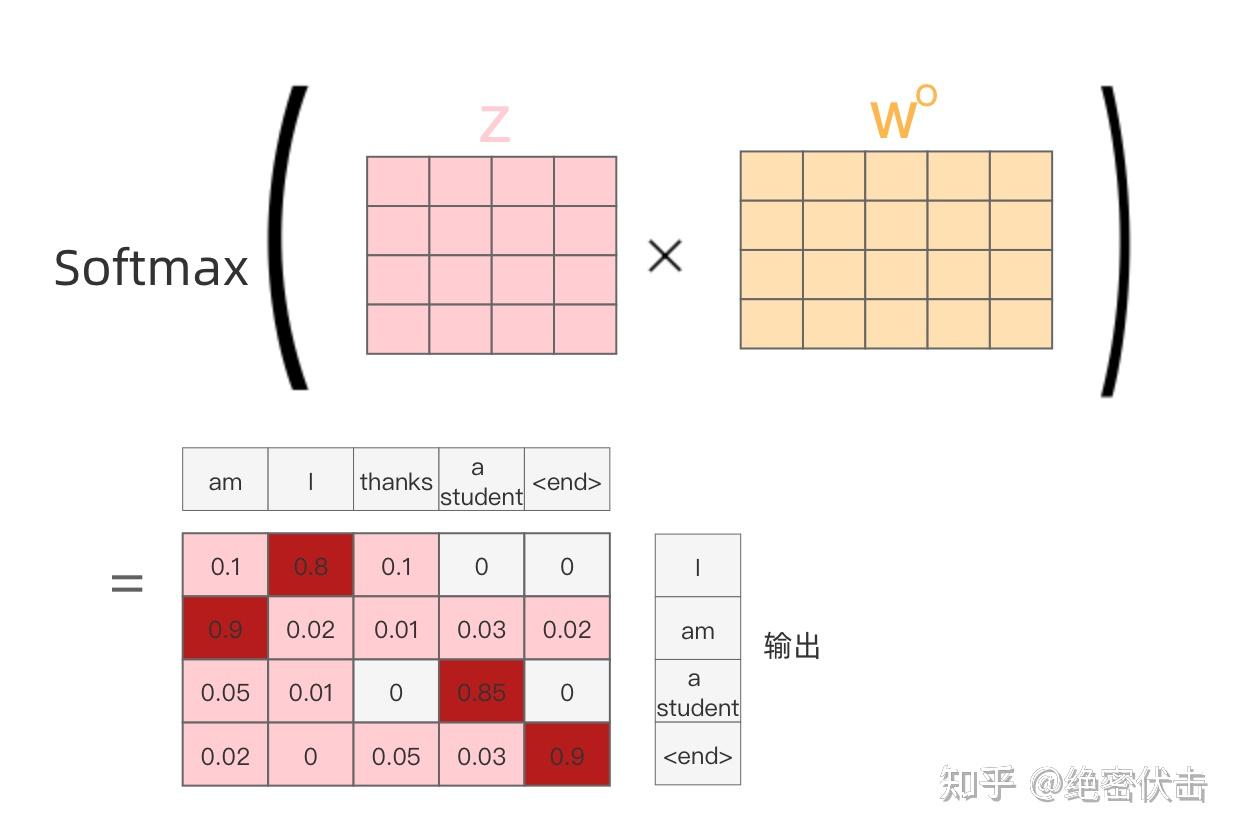

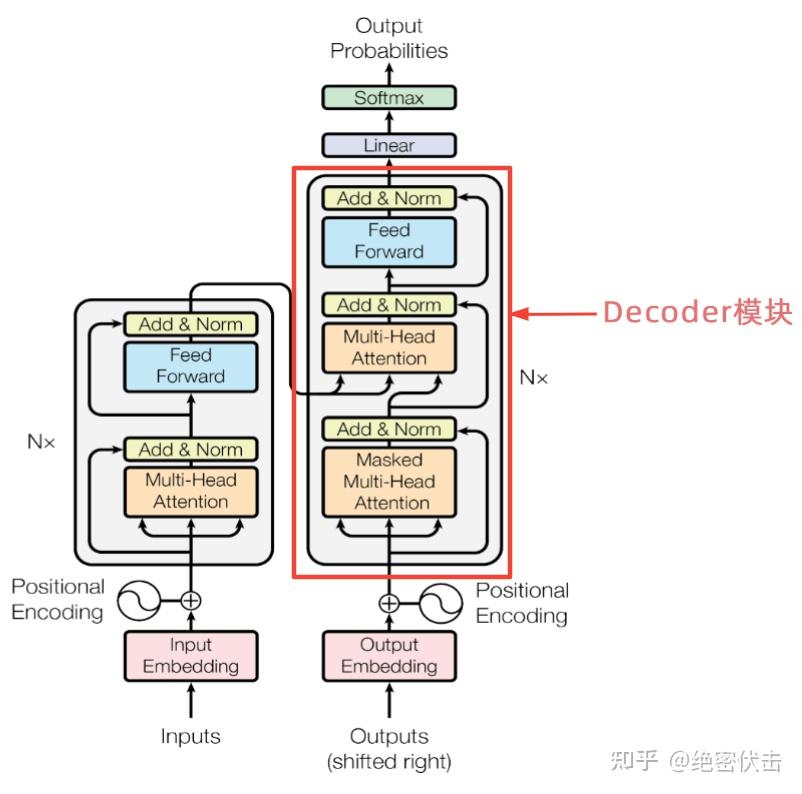

Multi-head attention allows the model to jointly attend to information from different representation subspaces at different positions. After explaining why multi-head attention is needed and the use of multi-head attention …
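The description of multi-head attention above can be illustrated with a minimal NumPy sketch. It assumes the standard scaled dot-product formulation with a single unbatched sequence, no masking, and no bias terms; the function name `multi_head_attention` and the weight names `w_q`, `w_k`, `w_v`, `w_o` are hypothetical choices for illustration and do not come from any particular library.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Self-attention over x of shape (seq_len, d_model).

    Assumption: all four weight matrices have shape (d_model, d_model).
    Returns the output (seq_len, d_model) and the per-head attention
    weights (num_heads, seq_len, seq_len).
    """
    seq_len, d_model = x.shape
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    # Each head works in its own d_head-dimensional subspace.
    q = split_heads(x @ w_q)
    k = split_heads(x @ w_k)
    v = split_heads(x @ w_v)

    # Scaled dot-product attention, computed for all heads at once.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    context = weights @ v  # (num_heads, seq_len, d_head)

    # Concatenate the heads and apply the output projection.
    merged = context.transpose(1, 0, 2).reshape(seq_len, d_model)
    return merged @ w_o, weights


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x = rng.normal(size=(4, 8))  # 4 tokens, d_model = 8
    w_q, w_k, w_v, w_o = (rng.normal(size=(8, 8)) for _ in range(4))
    out, attn = multi_head_attention(x, w_q, w_k, w_v, w_o, num_heads=2)
    print(out.shape, attn.shape)
```

Splitting the projected queries, keys, and values by reshaping, rather than keeping separate weight matrices per head, is one common way to let each head attend within its own subspace while still using a single matrix multiply per projection.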

Gallery for Multi Head Attention Code

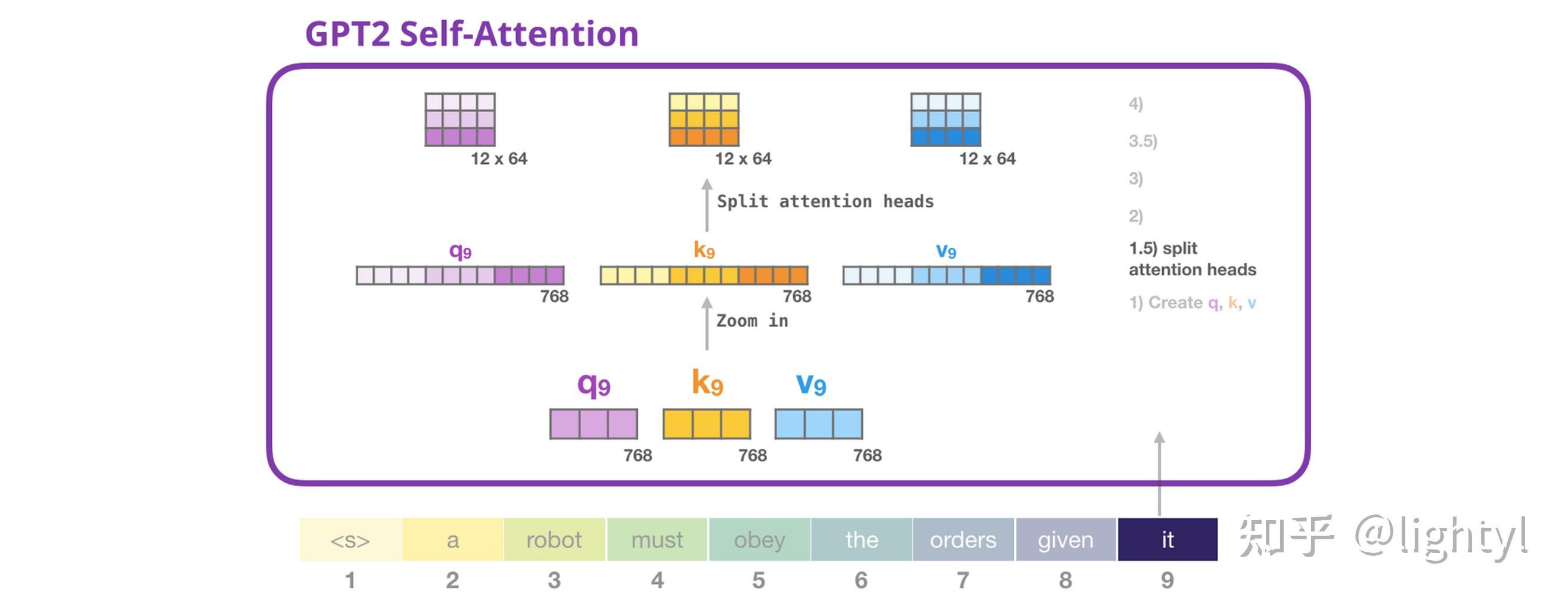

GPT GPT2

Patch level

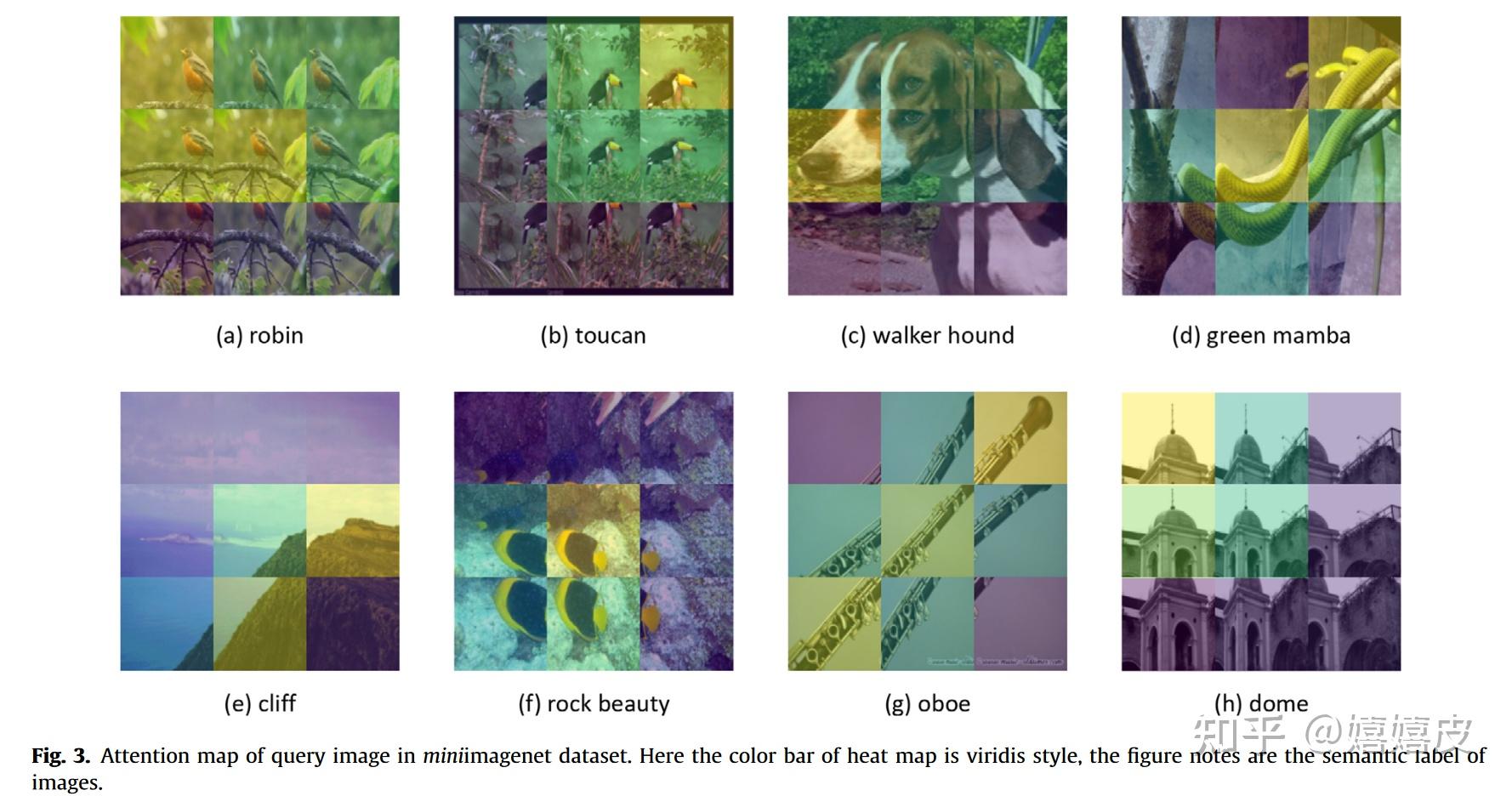

Self Attention Visualization

Transformer Part 1

SK5 IP

Transformer Transformer

Multi-head Attention Structure