Attention Is All You Need Github

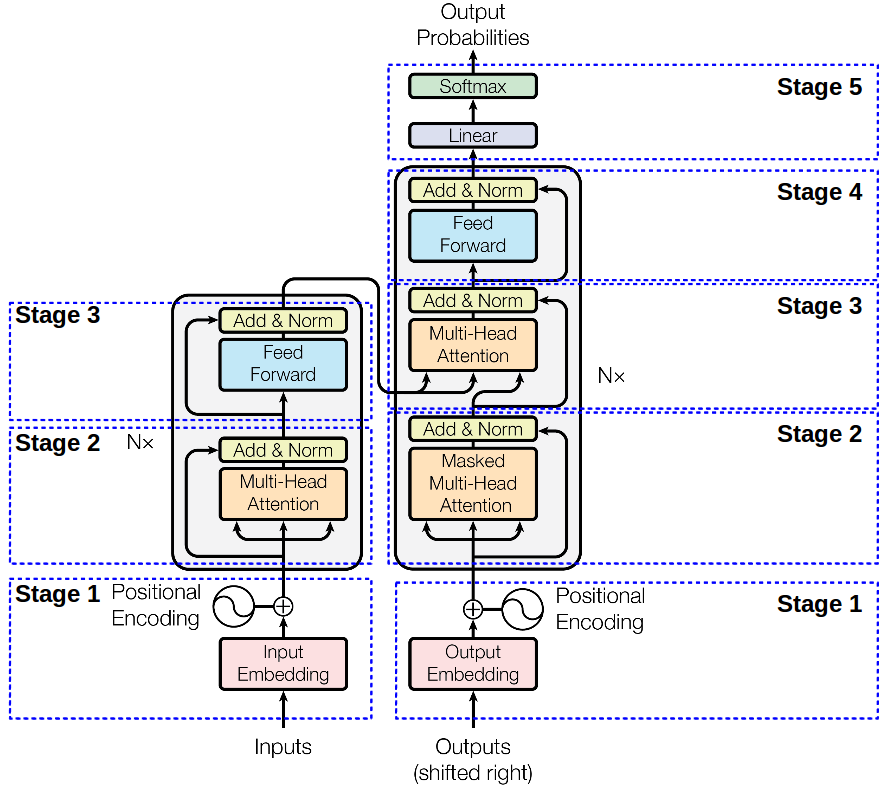

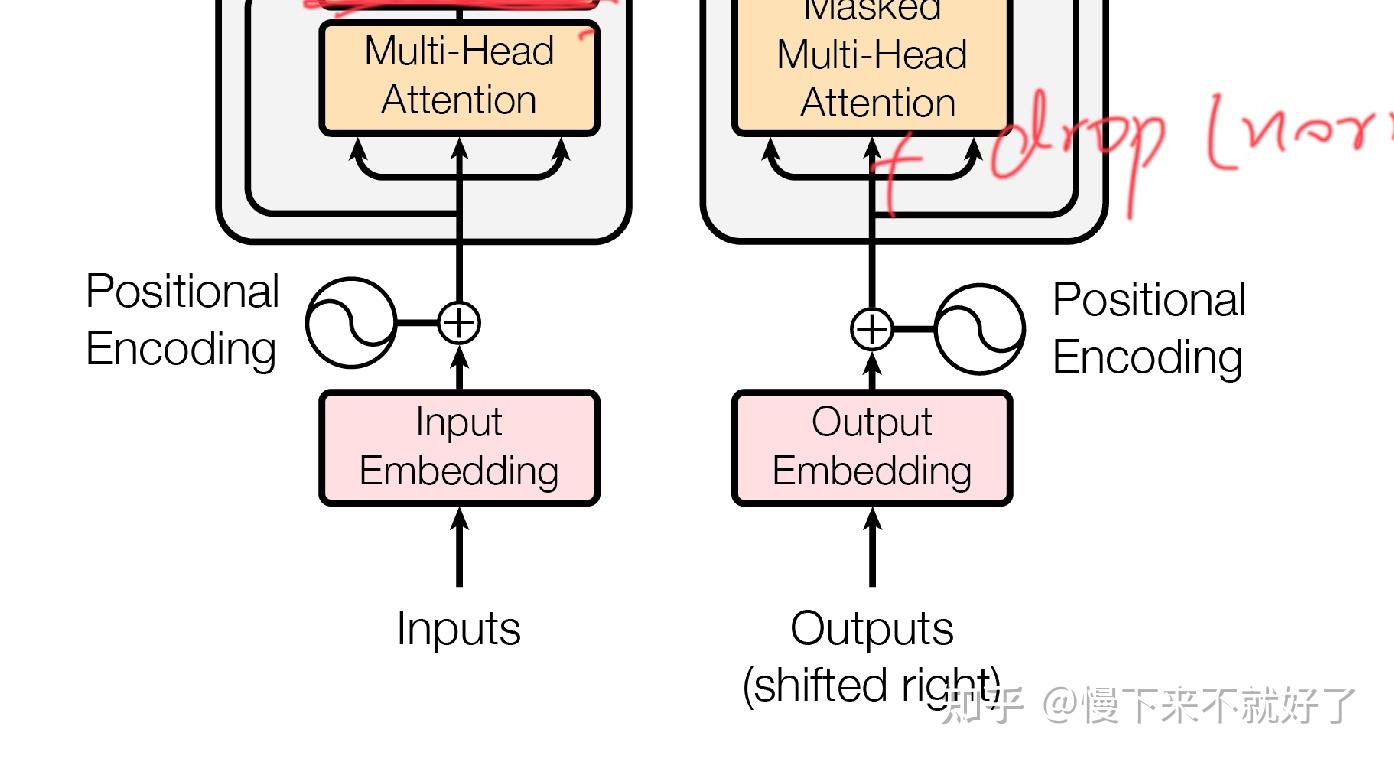

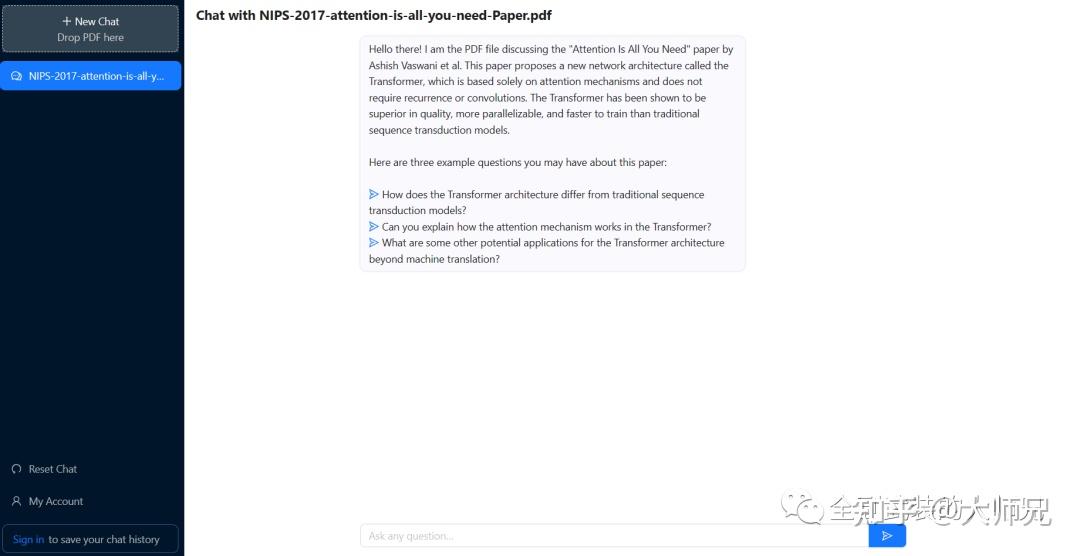

Attention Is All You Need: understanding the matrix operations in Transformers (ChatPDF, PDF).
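
The core matrix operation in Transformer attention is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. Below is a minimal pure-Python sketch of that formula. It assumes small matrices stored as lists of rows. The helper names (`softmax`, `matmul`, `transpose`, `scaled_dot_product_attention`) are illustrative only and do not come from any library.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns."""
    return [list(row) for row in zip(*m)]


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for Q (n x d_k), K (m x d_k), V (m x d_v)."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))                 # (n x m) query-key similarities
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]       # every row sums to 1
    return matmul(weights, v)                        # (n x d_v) weighted sums of value rows


Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
out = scaled_dot_product_attention(Q, K, V)
```

Query 0 matches key 0 best, so its output leans toward the first row of `V`, and query 1 leans toward the second. Each output row is a convex combination of the rows of `V`. A real model would use a tensor library rather than nested lists.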

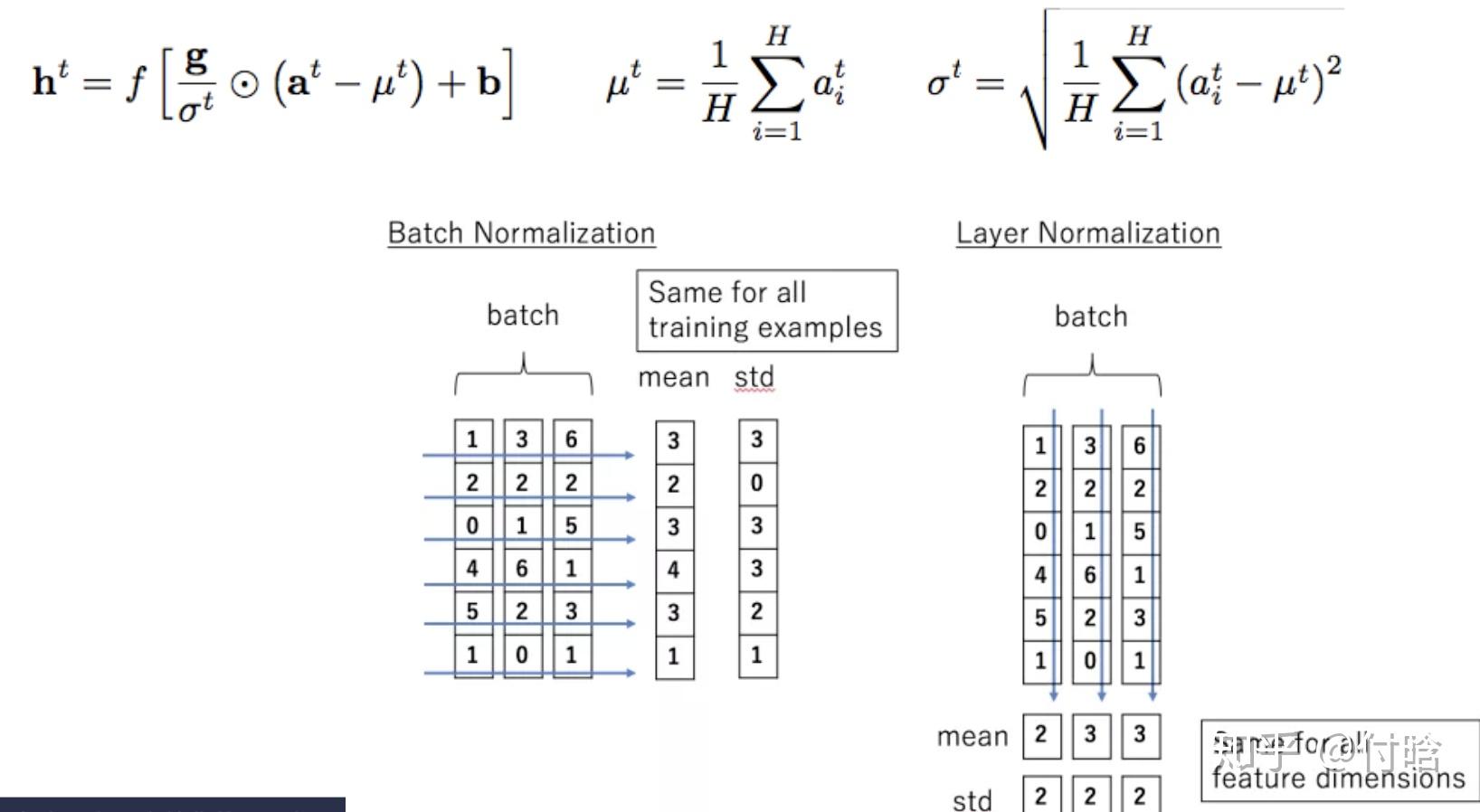

Linear attention (2025): linear attention versus softmax attention in the Transformer. Slides (PPT) on Attention Is All You Need.
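
The following hedged sketch contrasts linear attention with softmax attention. It uses kernelized linear attention in the style of Katharopoulos et al. (2020) and assumes the feature map φ(x) = elu(x) + 1. Softmax attention needs all n² query-key scores. If exp(q·k) is replaced with φ(q)·φ(k), the sums Σ φ(k_j) v_jᵀ and Σ φ(k_j) can be built once and reused for every query, so the cost grows linearly with sequence length. The function names are hypothetical. The result is a different attention function, not an exact reproduction of softmax attention.

```python
import math


def phi(x):
    """Assumed feature map: elu(x) + 1, which is positive for every real x."""
    return x + 1.0 if x > 0 else math.exp(x)


def linear_attention(q, k, v):
    """Kernelized attention in O(n * d * d_v): the sums over keys are shared by all queries."""
    phi_q = [[phi(x) for x in row] for row in q]
    phi_k = [[phi(x) for x in row] for row in k]
    d, d_v = len(phi_k[0]), len(v[0])
    s = [[0.0] * d_v for _ in range(d)]  # S = sum_j phi(k_j) v_j^T, shape (d x d_v)
    z = [0.0] * d                        # z = sum_j phi(k_j), shape (d,)
    for fk, vj in zip(phi_k, v):
        for a in range(d):
            z[a] += fk[a]
            for b in range(d_v):
                s[a][b] += fk[a] * vj[b]
    out = []
    for fq in phi_q:
        denom = sum(fq[a] * z[a] for a in range(d))
        out.append([sum(fq[a] * s[a][b] for a in range(d)) / denom for b in range(d_v)])
    return out


def pairwise_kernel_attention(q, k, v):
    """The same kernel evaluated pair by pair in O(n^2), used to check linear_attention."""
    phi_q = [[phi(x) for x in row] for row in q]
    phi_k = [[phi(x) for x in row] for row in k]
    out = []
    for fq in phi_q:
        w = [sum(a * b for a, b in zip(fq, fk)) for fk in phi_k]  # phi(q_i) . phi(k_j)
        total = sum(w)
        out.append([sum(wj * vj[b] for wj, vj in zip(w, v)) / total for b in range(len(v[0]))])
    return out


Q = [[0.5, -1.0], [1.5, 0.2], [-0.3, 0.8]]
K = [[1.0, 0.0], [-0.5, 2.0], [0.3, -0.7]]
V = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0]]
fast = linear_attention(Q, K, V)
slow = pairwise_kernel_attention(Q, K, V)
```

Both functions give the same numbers because matrix products are associative. Only the order of evaluation changes, and that order determines whether the cost is linear or quadratic in sequence length.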

Attention Is All You Need

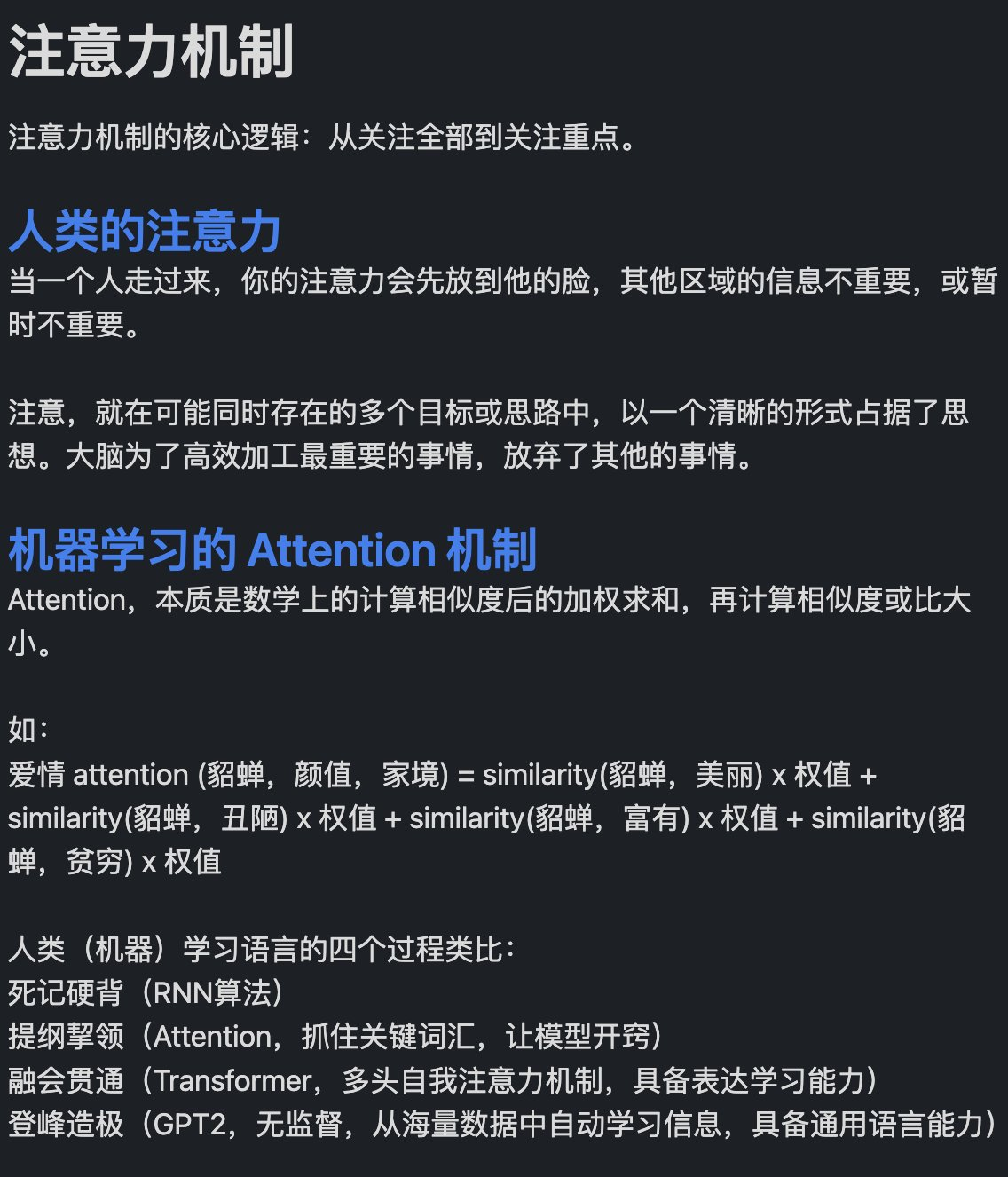

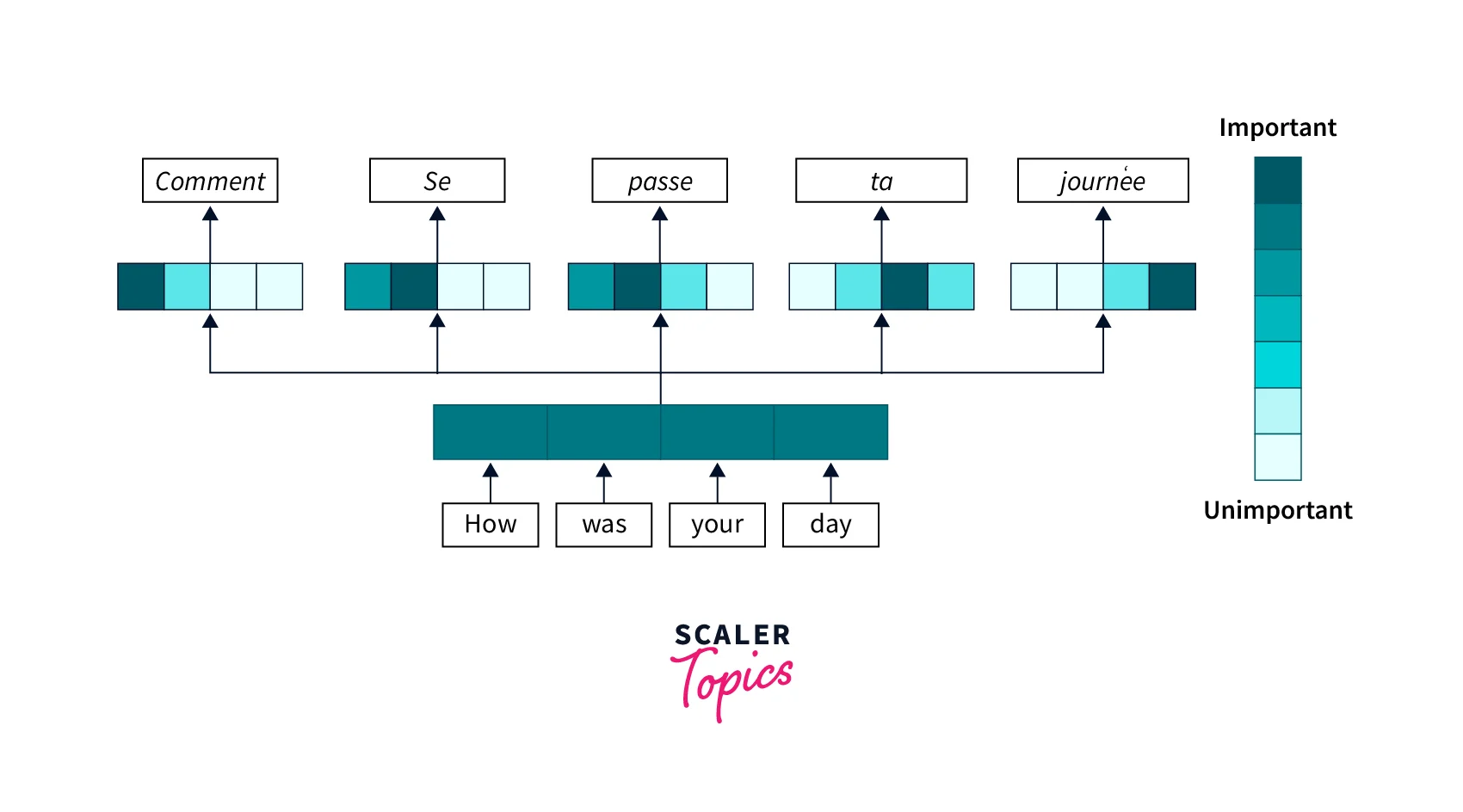

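In self-attention, every query Q is compared with every key K, and a softmax over the Q-K scores produces the attention weights. The core idea of Sparse Attention is to skip computing the relationship between every pair of elements. Instead, it computes relationships only between the most important elements of the sequence, which can significantly reduce computational complexity and memory usage. Specifically, …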
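
Sparse attention can use many different rules to decide which pairs matter. The sketch below takes one assumed example, a fixed local window (the same idea behind Sliding Window Attention). Query i is scored only against keys within `window` positions of it, so a length-n sequence needs at most n·(2·window + 1) scores instead of n². Learned or content-based selection of important pairs is not shown, and the function names are hypothetical.

```python
import math


def local_window_attention(q, k, v, window):
    """Sparse attention with a fixed local pattern: query i scores only keys j with |i - j| <= window."""
    n, d_k, d_v = len(q), len(q[0]), len(v[0])
    out = []
    for i in range(n):
        lo, hi = max(0, i - window), min(n, i + window + 1)
        # Scores are computed only inside the window; every other pair is skipped entirely.
        scores = [sum(a * b for a, b in zip(q[i], k[j])) / math.sqrt(d_k) for j in range(lo, hi)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        row = [0.0] * d_v
        for e, j in zip(exps, range(lo, hi)):
            for c in range(d_v):
                row[c] += (e / total) * v[j][c]
        out.append(row)
    return out


def scored_pairs(n, window):
    """Number of query-key scores local_window_attention computes for a length-n sequence."""
    return sum(min(n, i + window + 1) - max(0, i - window) for i in range(n))


print(scored_pairs(6, 1), "of", 6 * 6)  # 16 of 36
```

With `window = 0`, each position attends only to itself and the output equals `v`. Once `window >= n - 1`, the result matches full softmax attention.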

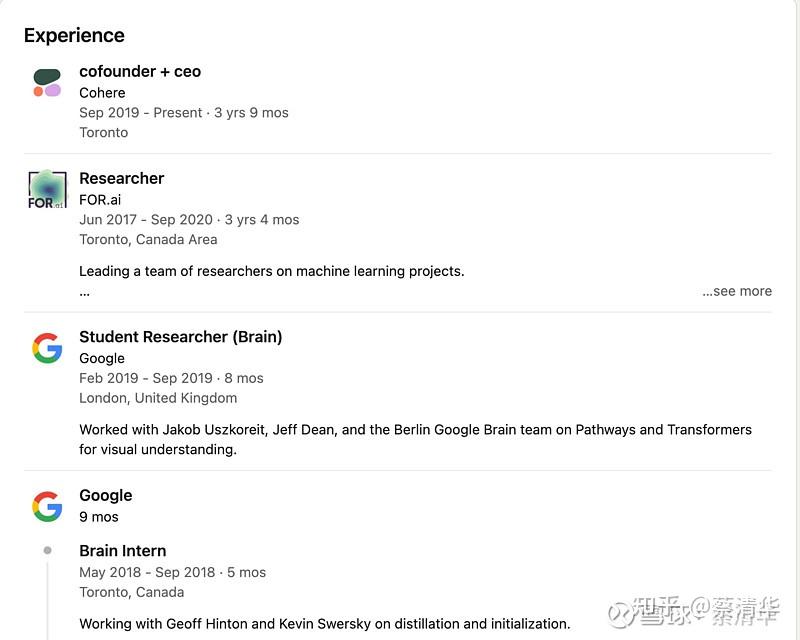

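I recently wrote a Q&A-style article, "Attention Mechanism + Transformer in NLP, Explained in Detail," to share my own views. Its table of contents begins: I. Analysis of the Attention mechanism: 1. Why introduce the Attention mechanism? 2. What kinds of Attention mechanisms are there? (…

Attention, FlashAttention, Sliding Window Attention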
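
FlashAttention relies on computing the softmax incrementally, so the full row of scores never has to be stored at once. The pure-Python sketch below shows only that online-softmax recurrence for a single query and assumes keys arrive one at a time. The actual FlashAttention kernel processes tiles of keys and values in GPU on-chip memory and fuses the steps, and this sketch does not attempt any of that. The function names are hypothetical.

```python
import math


def online_softmax_attention(q, keys, values):
    """One pass over (key, value) pairs, keeping a running max, normaliser, and output."""
    d_k = len(q)
    running_max = float("-inf")
    normaliser = 0.0
    acc = [0.0] * len(values[0])  # unnormalised weighted sum of values seen so far
    for k, v in zip(keys, values):
        s = sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k)
        new_max = max(running_max, s)
        # Rescale earlier statistics to the new max; exp(-inf) is 0.0 on the first step.
        scale = math.exp(running_max - new_max)
        p = math.exp(s - new_max)
        normaliser = normaliser * scale + p
        acc = [a * scale + p * vc for a, vc in zip(acc, v)]
        running_max = new_max
    return [a / normaliser for a in acc]


def two_pass_attention(q, keys, values):
    """Reference: materialise all scores first, then apply a standard stable softmax."""
    d_k = len(q)
    scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
    m = max(scores)
    w = [math.exp(s - m) for s in scores]
    total = sum(w)
    return [sum(wi * v[c] for wi, v in zip(w, values)) / total for c in range(len(values[0]))]


q = [0.3, -1.2]
keys = [[1.0, 0.5], [-2.0, 0.1], [0.4, 3.0], [2.5, -1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [5.0, 5.0], [-1.0, 2.0]]
streamed = online_softmax_attention(q, keys, values)
reference = two_pass_attention(q, keys, values)
```

The streamed result matches the two-pass reference up to rounding. The streaming version stores only a running max, a normaliser, and one accumulator vector, which is what makes tiling over long sequences possible.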

Gallery for Attention Is All You Need Github

Attention Is All You Need

A Review On The Attention Mechanism Of Deep Learning

Understanding Matrix Operations In Transformers

Transformer

ChatPDF PDF
